Java Coding Practice Summary before interview

- Prepare a Spring Boot IDEA project in advance

- Prepare a plain Java IDEA project for writing algorithms in advance

- Restful API

- Transactional Service

- Stream API (very simple array questions that take only a few lines of code, e.g. return the numbers in an array that are greater than 2):

  - Question 45 of Java backend Q top 100 (search for "45.")

- How to override hashCode and equals for a Java class

  - Question 118 of Java backend Q top 100 (search for "118.")
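As a warm-up for the two coding items in the checklist, here is a minimal sketch using only the JDK. The class and method names (`PracticeSnippets`, `greaterThan`, `Point`) are made up for illustration and are not taken from the referenced question list.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Objects;
import java.util.stream.Collectors;

// Hypothetical practice class; all names are illustrative only.
final class PracticeSnippets {

    // Stream API warm-up: keep only the values strictly greater than the threshold.
    static List<Integer> greaterThan(int[] values, int threshold) {
        return Arrays.stream(values)
                .filter(v -> v > threshold)
                .boxed()
                .collect(Collectors.toList());
    }

    // Value class whose equality is defined by its fields.
    static final class Point {
        private final int x;
        private final int y;

        Point(int x, int y) {
            this.x = x;
            this.y = y;
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) return true;
            if (!(o instanceof Point)) return false;
            Point other = (Point) o;
            return x == other.x && y == other.y;
        }

        // Uses the same fields as equals, so equal objects always share a hash code.
        @Override
        public int hashCode() {
            return Objects.hash(x, y);
        }
    }

    public static void main(String[] args) {
        System.out.println(greaterThan(new int[] {1, 2, 3, 4}, 2));
        System.out.println(new Point(1, 2).equals(new Point(1, 2)));
    }
}
```

The point to remember for the interview is the contract: whenever `equals` is overridden, `hashCode` must be overridden with the same fields, otherwise hash-based collections such as `HashMap` and `HashSet` misbehave.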

Contractor interview real Questions (Contractor interview real Q)

Backend Questions Top 100 (Java backend Q top 100)

Kafka & RabbitMQ (MQ notes)

Spring Newbie Notes (Spring Boot Newbie Notes)

Syllabus

| Session | Topic | Detailed Topics |
|---|---|---|
| 1 | JVM STRING FINAL | 1. Warm Up 2. JVM Memory Management 3. JVM, JDK, JRE 4. Garbage Collection 5. String & StringBuilder & StringBuffer 6. Final, Finally, Finalize 7. Immutable class (optional: basic syntax of java) |
| 2 | STATIC OOP | 1. Static 2. Marker Interface - Serializable, Cloneable 3. OOP 4. SOLID Principle 5. Reflection 6. Generics |
| 3 | COLLECTION | 1. Array vs ArrayList vs LinkedList 2. Set, TreeSet, LinkedHashSet 3. Map, LinkedHashMap, ConcurrentHashMap (how it works) 4. SynchronizedMap 5. Iterator vs Enumeration |
| 4 | EXCEPTION DESIGN PATTERN | 1. Design Pattern - Singleton, Factory, Observer, Proxy 2. Exception Type - compile, runtime, customized |
| 5 | THREADS | 1. MultiThreads Interaction (Synchronized, Atomic, ThreadLocal, Volatile) 2. Reentrant Lock 3. Executor and ThreadPool, ForkJoinPool 4. Future & CompletableFuture 5. Runnable vs Callable 6. Semaphore vs Mutex |
| 6 | JAVA8,17 | 1. Java 8: Functional Interface, Lambda, Stream API (map, filter, sorted, groupingBy etc), Optional, Default 2. Java 17: Sealed Class, advantage vs limitation, across package |
| 7 | SQL | 1. Primary Key, Normalization 2. Different types of Joins 3. Top asked SQLs - nth highest salary; highest salary each department; employee salary greater than manager 4. Introduction to Stored Procedure and Function 5. Clustered Index vs Non-Clustered Index 6. Explain Plan - what does it do, what can it tell |
| 8 | NOSQL | 1. SQL vs NoSQL 2. MongoDB vs Cassandra introduction 3. ACID vs CAP rules explanation |
| 9 | REST API | 1. DispatcherServlet 2. Rest API 3. How to create a good rest api 4. Http Error Code: 200, 201, 400, 401, 403, 404, 500, 502, 503, 504 5. Introduction of GraphQL, WebSocket, gRPC 6. Reactive Java |
| 10 | SPRING CORE | 1. IOC/DI 2. Bean Scope 3. Constructor vs Setter vs Field based injection |
| 11 | SPRING ANNOTATIONS | 1. Different spring annotations 2. @Controller vs @RestController 3. @Qualifier, @Primary 4. Spring Cache and Retry |
| 12 | SPRING BOOT | 1. How to create spring boot from Scratch 2. Benefit of Spring boot 3. Annotation @SpringBootApplication 4. AutoConfiguration, how to disable 5. Actuator |
| 13 | SPRING BOOT2 | 1. Spring ActiveProfile 2. AOP 3. @ExceptionHandler, @ControllerAdvice |
| 14 | DATA ACCESS | 1. JDBC, Statement vs PreparedStatement, Datasource 2. Hibernate ORM, Session, Cache 3. Optimistic Locking - add version column 4. Association: many-to-many |
| 15 | TRANSACTION JPA | 1. @Transactional - atomic operation 2. Propagation, Isolation 3. JPA naming convention 4. Paging and Sorting Using JPA 5. Hibernate Persistence Context |
| 16 | SECURITY | 1. How to implement Security by overriding Spring class 2. Basic Authentication and password encryption 3. JWT Token and workflow 4. Oauth2 workflow 5. Authorization based on User role |
| 17 | UNIT TEST | 1. Different Type of Tests in whole project lifecycle 2. Unit Test, Mock 3. Testing Rest Api with Rest Assured |
| 18 | AUTOMATION TEST | 1. BDD - Cucumber - annotations 2. Load Test with JMeter 3. Performance tool JProfiler 4. AB Test |
| 19 | MICROSERVICE | 1. Benefits/Disadvantage of MicroService 2. How to split monolithic to microservice 3. Circuit Breaker - concept, retry, fallback method 4. Load Balancer - concept and algorithms 5. API Gateway 6. Config Server |
| 20 | KAFKA | 1. Kafka - concepts, how it works and how message is sent to partition 2. Consumer Group, assignment strategy 3. Message in Order |
| 21 | KAFKA2 | 1. Kafka Duplicate Message 2. Kafka Message Loss 3. Poison Failure, DLQ 4. Kafka Security (SASL, ACLs, Encrypt etc) |
| 22 | DISTRIBUTED SYSTEM | 1. MicroService: how to communicate between services 2. Saga Pattern 3. Monitoring: Splunk, Grafana, Kibana, CloudWatch etc 4. System Design: distributed system |
| 23 | DEVOPS | 1. CICD 2. Jenkins pipeline with example 3. Git Commands: squash, cherry-pick etc 4. On-Call: PagerDuty etc 5. How do you solve a production issue with or without log |
| 24 | KUBERNETES | 1. Kubernetes, EKS, WCNP, KubeCtl |
| 25 | CLOUD | AWS Modules with examples |

Kubernetes

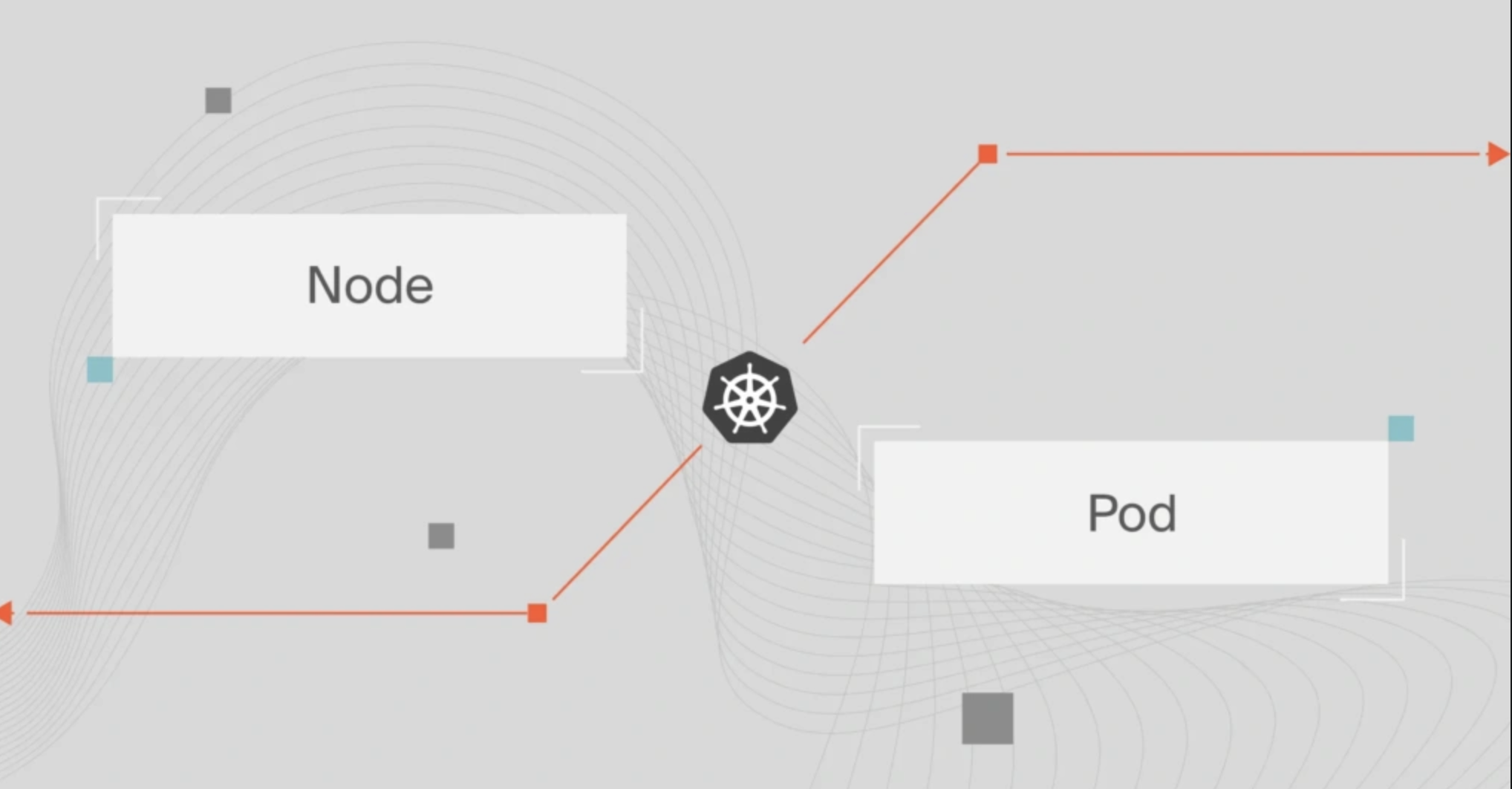

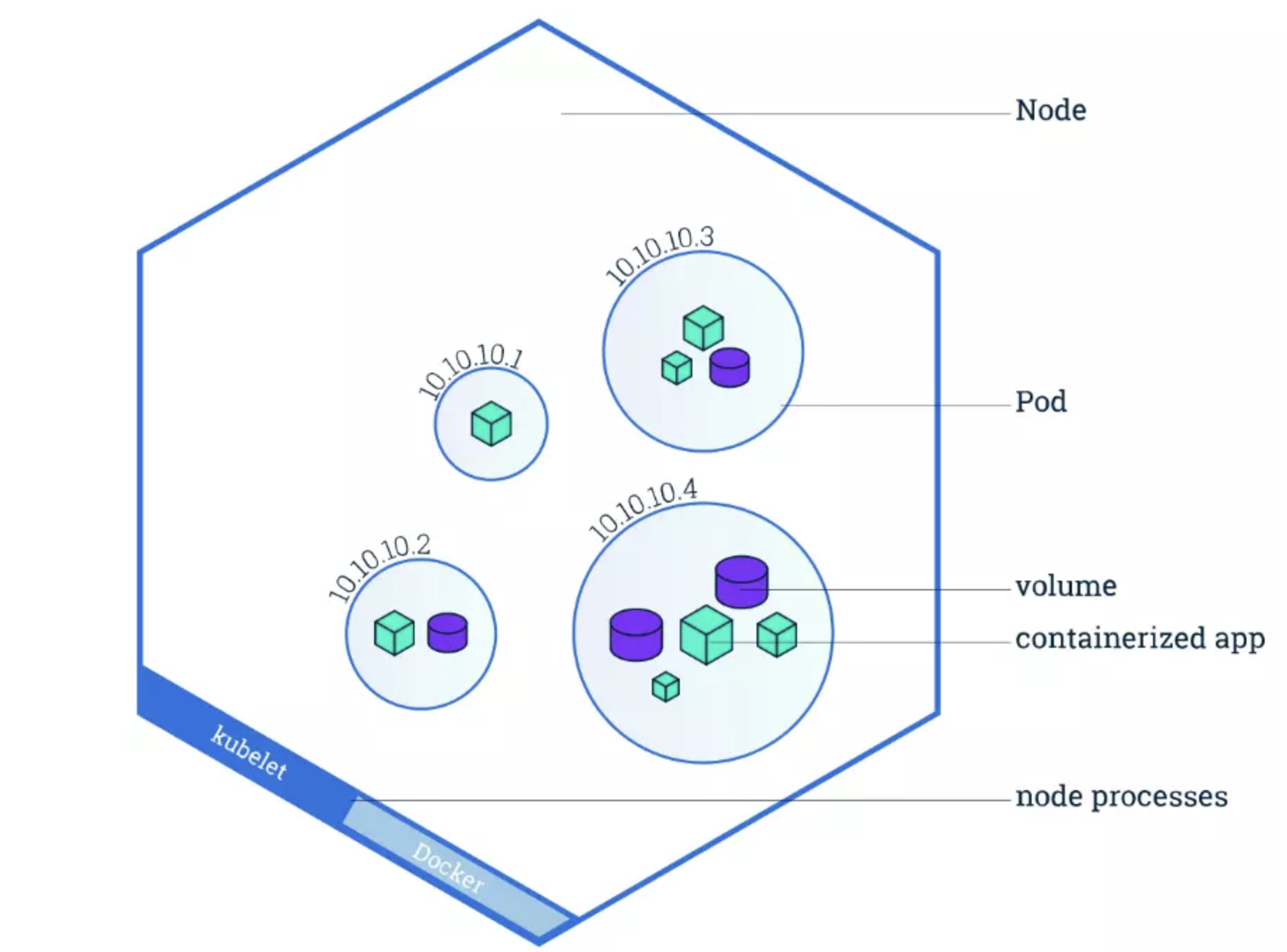

pod vs node in Kubernetes

Transcoded by SimpRead (简悦); original article at www.cloudzero.com


July 19, 2024, 10 min read

Kubernetes pods, nodes, and clusters get mixed up. Here’s a simple guide for beginners or if you just need to reaffirm your knowledge of Kubernetes components.

Kubernetes is increasingly becoming the standard way to deploy, run, and maintain cloud-native applications that run inside containers. Kubernetes (K8s) automates most container management tasks, empowering engineers to manage high-performing, modern applications at scale.

Meanwhile, several surveys, including those from VMware and Gartner, suggest that inadequate expertise with Kubernetes has held back organizations from fully adopting containerization. So, maybe you’re wondering how Kubernetes components work.

In that case, we’ve put together a bookmarkable guide on pods, nodes, clusters, and more. Let’s dive right in, starting with the very reason Kubernetes exists; containers.

Quick Summary

| | Pod | Node | Cluster |
|---|---|---|---|
| Description | The smallest deployable unit in a Kubernetes cluster | A physical or virtual machine | A grouping of multiple nodes in a Kubernetes environment |
| Role | Isolates containers from underlying servers to boost portability. Provides the resources and instructions for how to run containers optimally | Provides the compute resources (CPU, volumes, etc) to run containerized apps | Has the control plane to orchestrate containerized apps through nodes and pods |
| What it hosts | Application containers, supporting volumes, and similar IP addresses for logically similar containers | Pods with application containers inside them, kubelet | Nodes containing the pods that host the application containers, control plane, kube-proxy, etc |

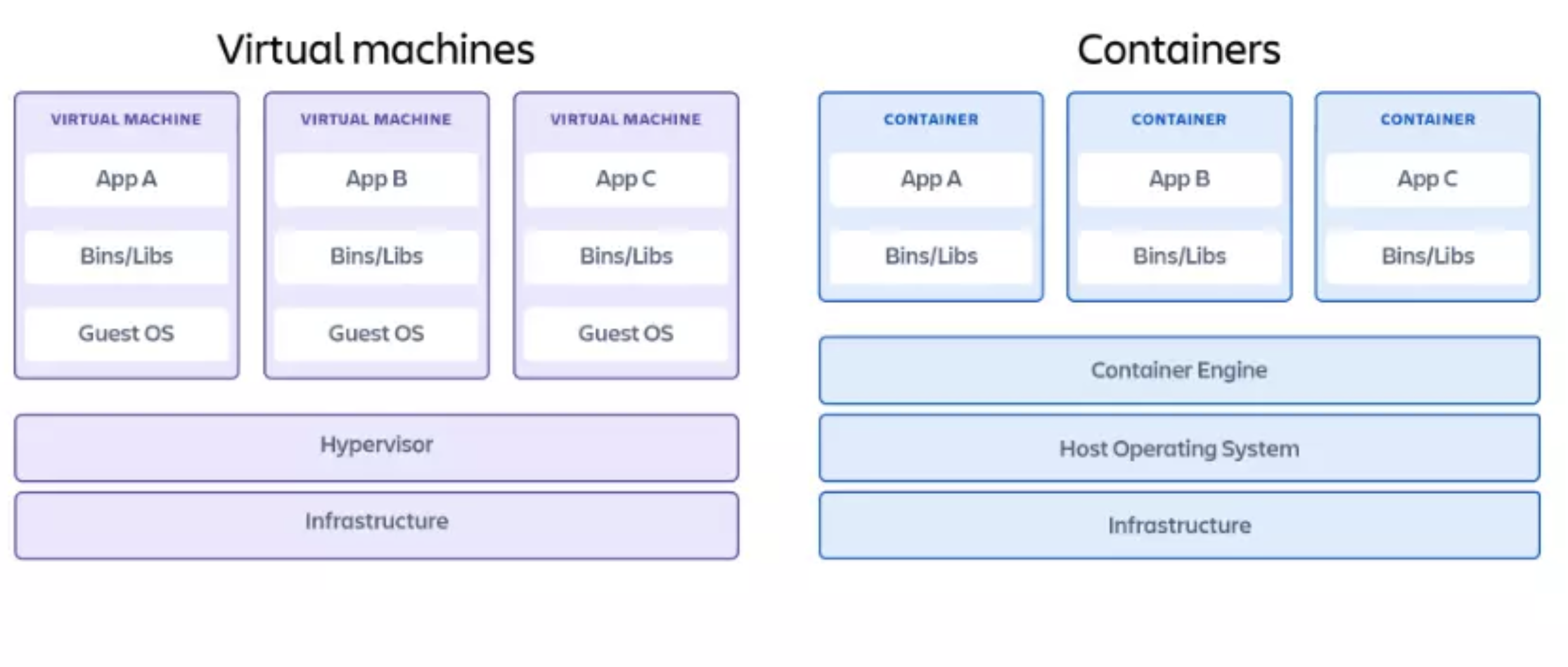

What Is A Container?

In software engineering, a container is an executable unit of software that packages and runs an entire application, or portions of it, within itself.

Containers comprise not only the application’s binary files, but also libraries, runtimes, configuration files, and any other dependencies that the application requires to run optimally. Talk about self-sufficiency.

Credit: Containers vs virtual machine architectures

This design enables a container to be an entire application runtime environment unto itself.

As a result, a container isolates the application it hosts from the external environment it runs on. This enables applications running in containers to be built in one environment and deployed in different environments without compatibility problems.

Also, because containers share resources and do not host their own operating system, they are leaner than virtual machines (VMs). This makes deploying containerized applications much quicker and more efficient than on contemporary virtual machines.

What Is A Containerized Application?

In cloud computing, a containerized application refers to an app that has been specially built using cloud-native architecture for running within containers. A container can either host an entire application or small, distributed portions of it (which are known as microservices).

Developing, packaging, and deploying applications in containers is referred to as containerization. Apps that are containerized can run in a variety of environments and devices without causing compatibility problems.

One more thing. Developers can isolate faulty containers and fix them independently before they affect the rest of the application or cause downtime. This is something that is extremely tricky to do with traditional monolithic applications.

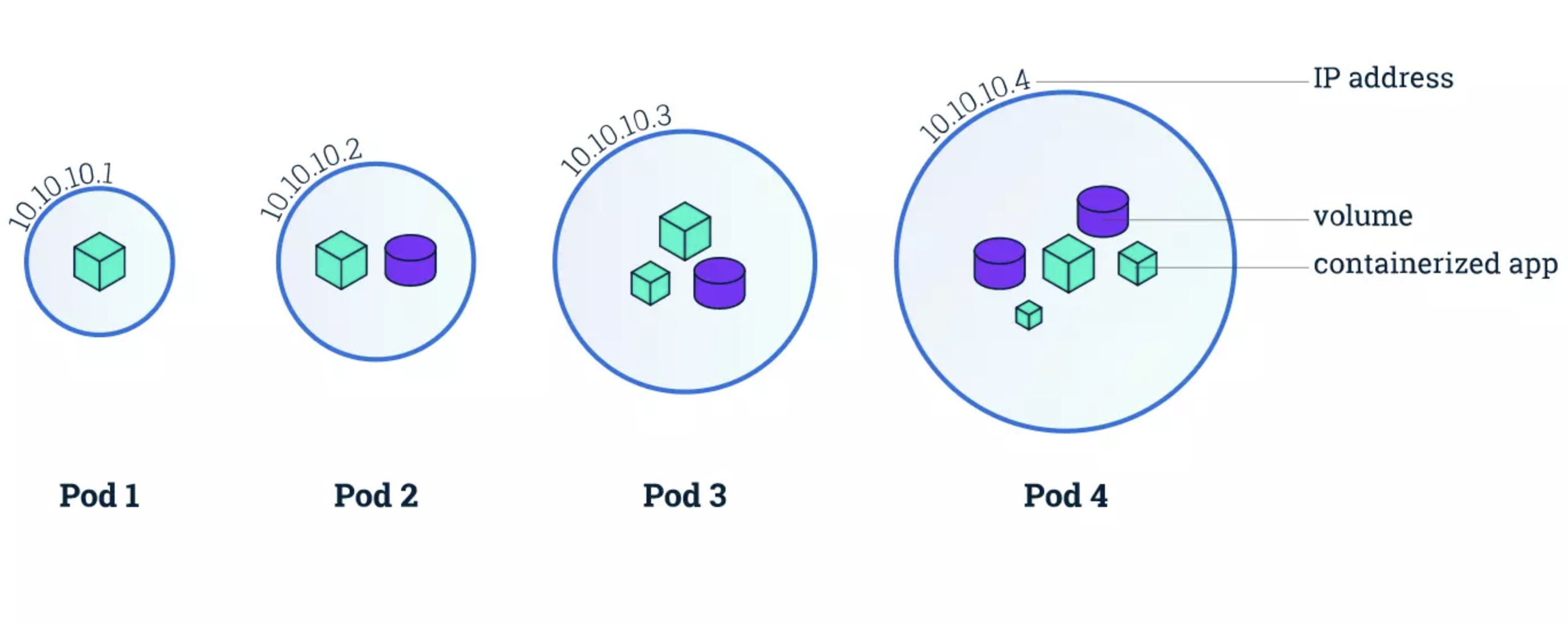

What Is A Kubernetes Pod?

A Kubernetes pod is a collection of one or more application containers.

The pod is an additional level of abstraction that provides shared storage (volumes), IP address, communication between containers, and hosts other information about how to run application containers. Check this out:

Credit: Kubernetes Pods architecture by Kubernetes.io

So, containers do not run directly on virtual machines and pods are a way to turn containers on and off.

Containers that must communicate directly to function are housed in the same pod. These containers are also co-scheduled because they work within a similar context. Also, the shared storage volumes enable pods to last through container restarts because they provide persistent data.

Kubernetes also scales or replicates the number of pods up and down to meet changing load/traffic/demand/performance requirements. Similar pods scale together.

Another unique feature of Kubernetes is that rather than creating containers directly, it generates pods that already have containers.

Also, whenever you create a K8s pod, the platform automatically schedules it to run on a Node. This pod will remain active until the specific process completes, resources to support the pod run out, the pod object is removed, or the host node terminates or fails.

Each pod runs inside a Kubernetes node, and each pod can fail over to another, logically similar pod running on a different node in case of failure. And speaking of Kubernetes nodes.

What Is A Kubernetes Node?

A Kubernetes node is either a virtual or physical machine that one or more Kubernetes pods run on. It is a worker machine that contains the necessary services to run pods, including the CPU and memory resources they need to run.

Now, picture this:

Credit: How Kubernetes Nodes work by Kubernetes.io

Each node also comprises three crucial components:

- Kubelet – This is an agent that runs inside each node to ensure pods are running properly, including communications between the Master and nodes.

- Container runtime – This is the software that runs containers. It manages individual containers, including retrieving container images from repositories or registries, unpacking them, and running the application.

- Kube-proxy – This is a network proxy that runs inside each node, managing the networking rules within the node (between its pods) and across the entire Kubernetes cluster.

Here’s what a Cluster is in Kubernetes.

What Is A Kubernetes Cluster?


Nodes usually work together in groups. A Kubernetes cluster contains a set of worker machines (nodes). The cluster automatically distributes workload among its nodes, enabling seamless scaling.

Here’s that symbiotic relationship again.

A cluster consists of several nodes. The node provides the compute power to run the setup. It can be a virtual machine or a physical machine. A single node can run one or more pods.

Each pod contains one or more containers. A container hosts the application code and all the dependencies the app requires to run properly.

Something else. The cluster also comprises the Kubernetes Control Plane (or Master), which manages each node within it. The control plane is a container orchestration layer where K8s exposes the API and interfaces for defining, deploying, and managing containers’ lifecycles.

The master assesses each node and distributes workloads according to available nodes. This load balancing is automatic, ensures efficiency in performance, and is one of the most popular features of Kubernetes as a container management platform.

You can also run the Kubernetes cluster on different providers’ platforms, such as Amazon’s Elastic Kubernetes Service (EKS), Microsoft’s Azure Kubernetes Service (AKS), or the Google Kubernetes Engine (GKE).

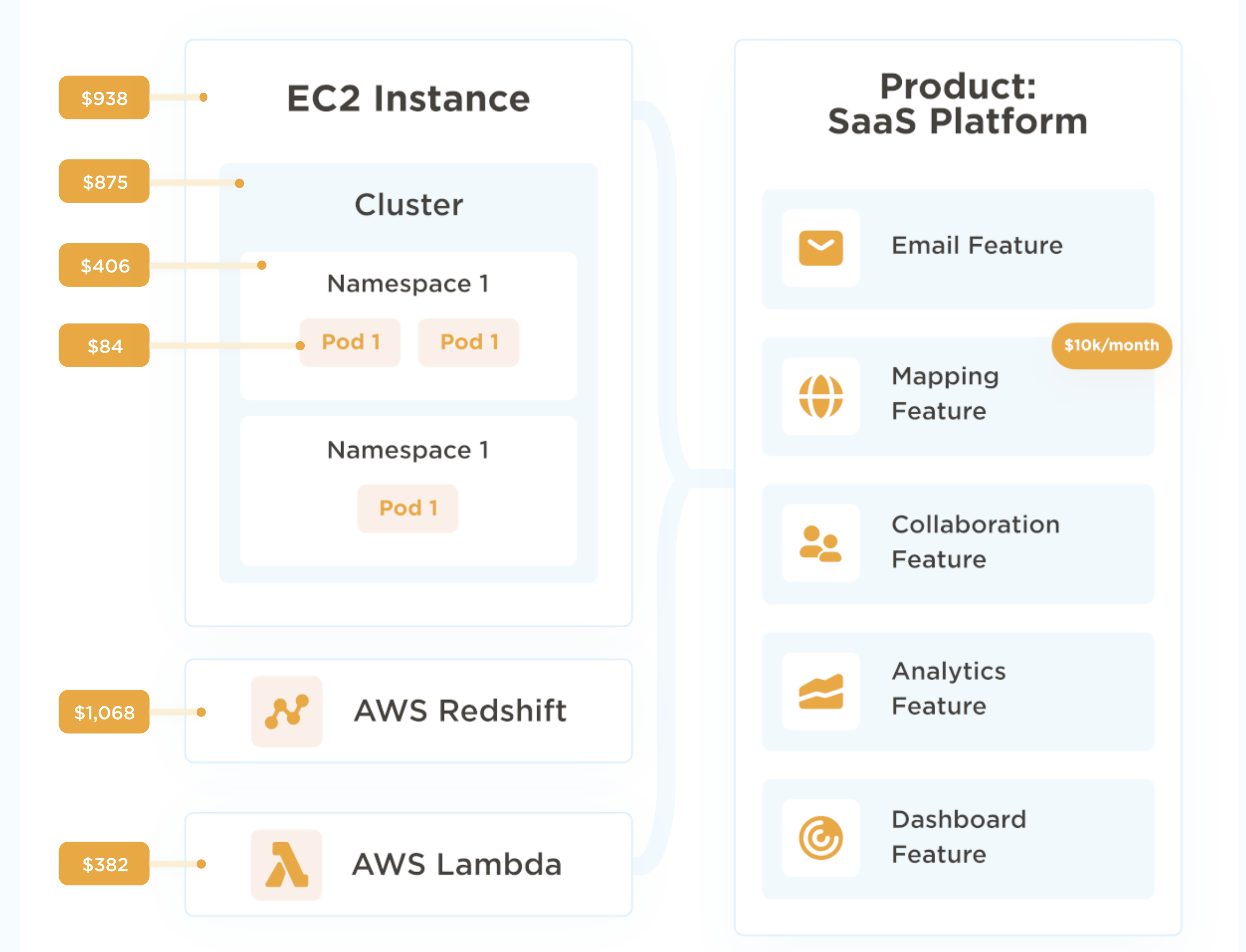

Take The Next Step: View, Track, And Control Your Kubernetes Costs With Confidence

Open-source, highly scalable, and self-healing, Kubernetes is a powerful platform for managing containerized applications. But as Kubernetes components scale to support business growth, Kubernetes cost management tends to get blindsided.

Most cost tools only display your total cloud costs, not how Kubernetes containers contributed. With CloudZero, you can view Kubernetes costs down to the hour as well as by K8s concepts such as, cost per pod, container, microservice, namespace, and cluster costs.

By drilling down to this level of granularity, you are able to find out what people, products, and processes are driving your Kubernetes spending.

You can also combine your containerized and non-containerized costs to simplify your analysis. CloudZero enables you to understand your Kubernetes costs alongside your AWS, Azure, Google Cloud, Snowflake, Databricks, MongoDB, and New Relic spend. Getting the full picture.

You can then decide what to do next to optimize the cost of your containerized applications without compromising performance. CloudZero will even alert you when cost anomalies occur, before you overspend.


Kubernetes FAQ

Is a Kubernetes Pod a Container?

Not exactly. A Kubernetes pod is a group of one or more containers that share storage and networking resources, so a pod wraps containers rather than being one. Pods are the smallest deployable units in Kubernetes and manage containers collectively, allowing them to run in a shared context with shared namespaces.

What is the difference between a container, a node, and a pod?

A node is a worker machine in Kubernetes, part of a cluster, that runs containers and other Kubernetes components. A pod, on the other hand, is a higher-level abstraction that encapsulates one or more containers and their shared resources, managed collectively within a node.

Can a pod have multiple containers?

Yes, a Kubernetes pod can have multiple containers. Pods are designed to encapsulate closely coupled containers that need to share resources and communicate with each other over localhost. This approach facilitates running multiple containers within the same pod while treating them as a cohesive unit for scheduling, scaling, and management within the Kubernetes cluster.

How many pods run on a node?

The number of Kubernetes pods that can run on a node depends on various factors such as the node’s resources (CPU, memory, etc.), the resource requests and limits set by the pods, and any other applications or system processes running on the node.

Generally, a node can run multiple pods, and the Kubernetes scheduler determines pod placement based on available resources and scheduling policies defined in the cluster configuration.

Security

- How to implement Security by overriding Spring class

  - You can implement security in a Spring application by customizing Spring Security classes. For example, you can extend `WebSecurityConfigurerAdapter` and override methods like `configure(HttpSecurity http)` to define custom security configurations such as access rules and authentication mechanisms. This class was deprecated in Spring Security 5.7 and removed in 6.0; newer applications declare a `SecurityFilterChain` bean instead.

  - You can also implement interfaces like `UserDetailsService` to provide custom user lookup for authentication and authorization.

- Basic Authentication and password encryption

  - Basic authentication is a simple authentication mechanism where the client sends the username and password in the `Authorization` request header. The credentials are Base64-encoded, not encrypted, so it should only be used over HTTPS.

  - In Spring, it can be configured easily. Password hashing is crucial for security.

  - Spring provides various password encoding mechanisms like `BCryptPasswordEncoder` to securely hash and store passwords.

  - When a user registers or changes their password, the password is hashed and the hash is stored in the database. During authentication, the provided password is checked against the stored hash (for BCrypt, by re-hashing it with the salt embedded in the stored hash).
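To make the header format concrete, here is a minimal JDK-only sketch of how a Basic `Authorization` header is built and parsed. `BasicAuthHeader` and its methods are hypothetical helpers, not Spring Security classes; in a Spring application the framework does this parsing for you.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper illustrating the Basic scheme; not a Spring class.
final class BasicAuthHeader {

    // Builds the header value: "Basic " + base64(username + ":" + password).
    static String encode(String username, String password) {
        String pair = username + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    // Parses the header into {username, password}; returns null if it is not valid Basic auth.
    static String[] decode(String header) {
        if (header == null || !header.startsWith("Basic ")) {
            return null;
        }
        byte[] raw;
        try {
            raw = Base64.getDecoder().decode(header.substring("Basic ".length()));
        } catch (IllegalArgumentException e) {
            return null; // the payload is not valid Base64
        }
        String pair = new String(raw, StandardCharsets.UTF_8);
        int colon = pair.indexOf(':'); // split on the first colon: the password may contain ':'
        if (colon < 0) {
            return null;
        }
        return new String[] {pair.substring(0, colon), pair.substring(colon + 1)};
    }
}
```

Because anyone who sees the header can decode it, the scheme relies on TLS for confidentiality, and the server should store only a password hash.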

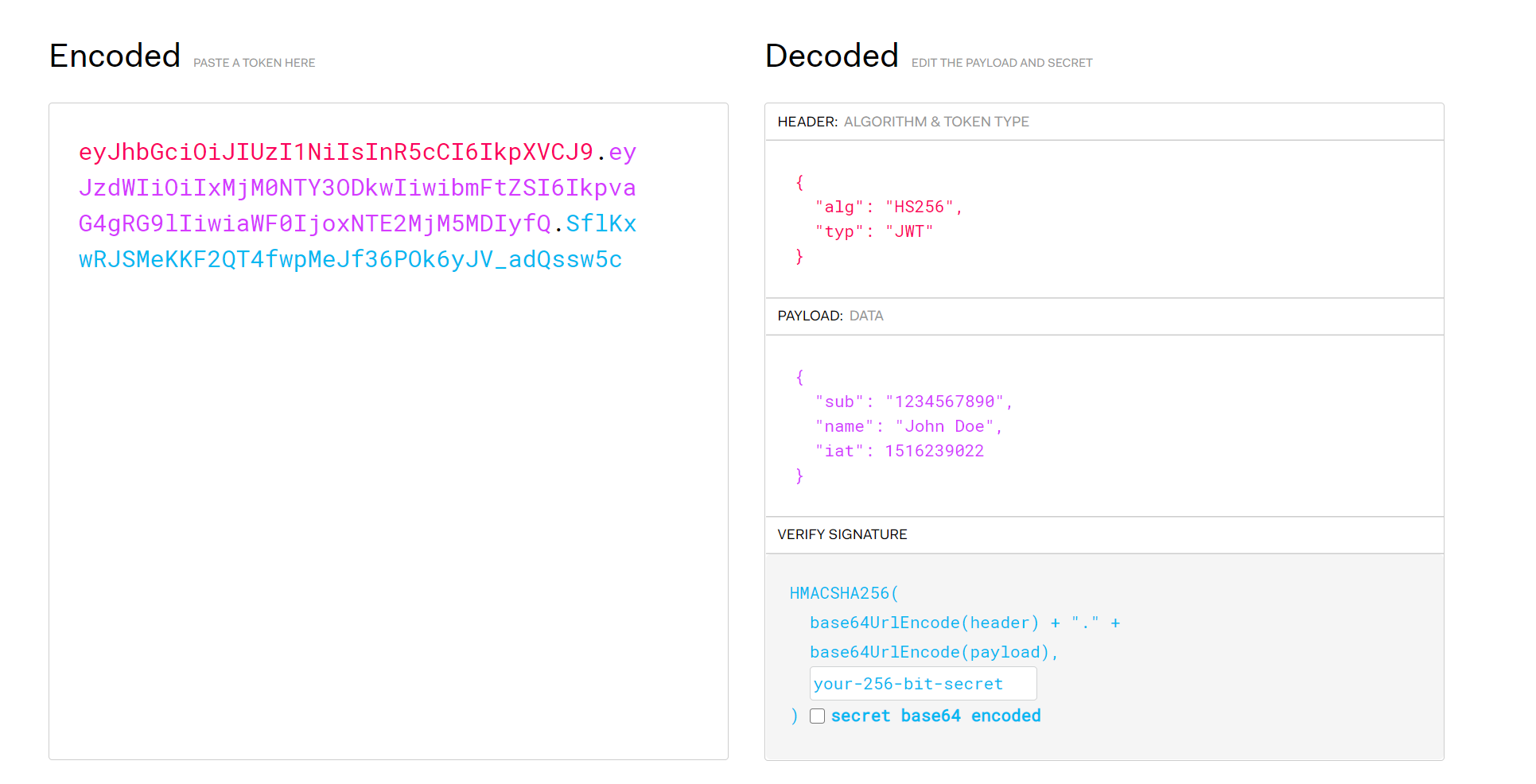

- JWT Token and workflow

  - JSON Web Token (JWT) is a widely used token-based authentication and authorization mechanism.

  - The workflow typically involves:

    - The client sends the username and password to the server for authentication.

    - If the authentication is successful, the server generates a JWT containing user information as the `payload` and a `signature`.

    - The client then stores the token and sends it in the `headers` of subsequent requests.

    - The server validates the token on each request and authorizes the user based on the information in the token.
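The issue-and-validate steps of this workflow can be sketched with JDK classes only, assuming the HS256 (HMAC-SHA256) signing algorithm. `JwtSketch`, the payload, and the secret are hypothetical; a production service would normally rely on an established JWT library and would also validate claims such as expiry.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical HS256 sketch; it does not check claims such as "exp".
final class JwtSketch {
    private static final Base64.Encoder B64 = Base64.getUrlEncoder().withoutPadding();

    // HMAC-SHA256 over "header.payload", Base64URL-encoded without padding.
    private static String sign(String signingInput, byte[] secret) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secret, "HmacSHA256"));
            return B64.encodeToString(mac.doFinal(signingInput.getBytes(StandardCharsets.UTF_8)));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 unavailable or key invalid", e);
        }
    }

    // Server side after a successful login: header.payload.signature
    static String issue(String payloadJson, byte[] secret) {
        String header = B64.encodeToString(
                "{\"alg\":\"HS256\",\"typ\":\"JWT\"}".getBytes(StandardCharsets.UTF_8));
        String payload = B64.encodeToString(payloadJson.getBytes(StandardCharsets.UTF_8));
        String signingInput = header + "." + payload;
        return signingInput + "." + sign(signingInput, secret);
    }

    // Server side on each request: returns the payload JSON if the signature matches, else null.
    static String verify(String token, byte[] secret) {
        String[] parts = token.split("\\.");
        if (parts.length != 3) {
            return null;
        }
        String expected = sign(parts[0] + "." + parts[1], secret);
        boolean signatureOk = MessageDigest.isEqual(
                expected.getBytes(StandardCharsets.UTF_8),
                parts[2].getBytes(StandardCharsets.UTF_8));
        if (!signatureOk) {
            return null;
        }
        return new String(Base64.getUrlDecoder().decode(parts[1]), StandardCharsets.UTF_8);
    }
}
```

The payload is only encoded, not encrypted, so it should not carry secrets; the signature only guarantees that the server issued the token and that it was not modified.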

- Oauth2 workflow

- OAuth2 is an authorization framework that allows users to grant limited access to their resources on one server to another server without sharing their credentials.

- The typical OAuth2 workflow involves steps like

- the client redirecting the user to the authorization server for authentication and authorization,

- the user granting permission, the authorization server issuing an access token,

- and the client using the access token to access protected resources on the resource server.

- Authorization based on User role

  - In a Spring Security application, authorization based on user roles can be implemented by assigning different roles to users and configuring access rules based on those roles.

  - You can use annotations like `@PreAuthorize` or configure access rules in the security configuration to specify which roles are allowed to access which resources or perform which operations.

  - For example, you can define that only users with the `ROLE_ADMIN` role can access certain administrative endpoints.

- What is an XSS attack and how to prevent it?

  - XSS (Cross-Site Scripting) is a vulnerability where an attacker injects malicious scripts into a website. These scripts run in users' browsers, allowing attackers to steal data or perform actions on behalf of the user.

  - To prevent XSS, you should:

    - Sanitize and escape user input.

    - Use Content Security Policy (CSP).

    - Set HttpOnly and Secure flags for cookies.

    - Avoid inserting user input directly into the HTML without validation.

- What is a CSRF attack and how to prevent it?

  - CSRF (Cross-Site Request Forgery) is an attack where an attacker tricks a user into making unwanted requests to a website on which they are authenticated. This can result in unauthorized actions, such as changing account settings or making purchases.

  - To prevent CSRF, you should:

    - Use anti-CSRF tokens in forms.

    - Implement SameSite cookie attributes.

    - Ensure that sensitive actions require additional confirmation (such as re-authentication or a CAPTCHA).

    - Check the `Referer` header to validate requests.

Authorization based on User role using Spring Security

In Spring Security, you can implement role-based authorization by assigning roles to users and restricting access to certain endpoints or methods based on those roles.

Step-by-Step: Role-Based Authorization in Spring

1. Assign Roles to Users

Typically in your UserDetailsService implementation or user entity:

⚠️ Prefix role authorities with `ROLE_`; Spring Security expects it by default (`hasRole("ADMIN")` checks for the authority `ROLE_ADMIN`).

2. Secure Endpoints Based on Role

Using HttpSecurity in a SecurityConfig class:


3. Method-Level Authorization (Optional)

Use annotations such as `@PreAuthorize("hasRole('ADMIN')")` on methods, with `@EnableMethodSecurity` on a configuration class.

Summary

| Component | Purpose |
|---|---|
| `SimpleGrantedAuthority` | Assign roles to user |
| `hasRole("ROLE_NAME")` | Protect endpoints/methods by role |
| `@PreAuthorize` | Method-level security (optional) |
| `SecurityFilterChain` | Define path-based access control |
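The role-prefix convention and a path-based rule can be modelled in plain Java as below. This is only a model of the behaviour, not Spring Security code: `RoleCheckSketch`, the `/admin/` path, and the `USER` role are assumptions made for illustration.

```java
import java.util.Set;

// Plain-Java model of role checks; not the Spring Security implementation.
final class RoleCheckSketch {
    private static final String ROLE_PREFIX = "ROLE_";

    // Mirrors the convention that hasRole("ADMIN") looks for the authority "ROLE_ADMIN".
    static boolean hasRole(Set<String> grantedAuthorities, String role) {
        return grantedAuthorities.contains(ROLE_PREFIX + role);
    }

    // Hypothetical path rules: /admin/** needs ADMIN, everything else needs USER or ADMIN.
    static boolean canAccess(String path, Set<String> grantedAuthorities) {
        if (path.startsWith("/admin/")) {
            return hasRole(grantedAuthorities, "ADMIN");
        }
        return hasRole(grantedAuthorities, "USER") || hasRole(grantedAuthorities, "ADMIN");
    }
}
```

The model shows why the stored authority string and the role name used in a rule differ by the `ROLE_` prefix: the rule names the role, the user record carries the prefixed authority.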

XSS

XSS (Cross-Site Scripting) is a type of security vulnerability where an attacker injects malicious scripts into trusted websites. When a user visits the site, the script runs in their browser, potentially stealing cookies, session tokens, or sensitive data.

Types of XSS

| Type | Description |

|---|---|

| Stored XSS | Malicious script is permanently stored on the server (e.g., in a database or comment). |

| Reflected XSS | Script is immediately returned by the server (e.g., in URL parameters). |

| DOM-based XSS | Script is injected via client-side JavaScript manipulation, without server interaction. |

Example of XSS

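function escape(s) { return '<script>console.log("' + s + '");</script>'; }

If the input `s` is `");alert(1);//`, the function returns `<script>console.log("");alert(1);//");</script>`, which pops up the `alert(1)` dialog. This is malicious script injection: the input closes the string and the `console.log` call early, and the trailing `//` comments out the leftover `");`.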

How to Prevent XSS

1. Escape Output

- Always escape user input before injecting it into HTML, JS, or attributes.

- Use libraries like DOMPurify (for HTML) or encoding functions (`encodeURIComponent`, etc).

2. Use Safe APIs

- Prefer `textContent` and `createTextNode` over `innerHTML`.

3. Validate Input

- Use server-side and client-side validation to restrict allowed content.

4. Use Content Security Policy (CSP)

- Prevents execution of inline scripts or loading from untrusted sources.

5. Sanitize User Input

- Strip or neutralize dangerous code via input sanitization libraries (e.g., DOMPurify).

Summary

XSS exploits trust between users and websites.

Defense = sanitize, escape, validate, and use secure APIs.
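On the server side, the "escape output" step can be sketched in Java as follows. `HtmlEscaper` is a hypothetical helper written for this note; in practice a maintained encoder library or the template engine's built-in escaping is the safer choice.

```java
// Hypothetical helper; covers HTML text and quoted attribute values only.
final class HtmlEscaper {

    // Replaces the five characters that are significant in HTML markup.
    static String escapeHtml(String input) {
        StringBuilder out = new StringBuilder(input.length() + 16);
        for (int i = 0; i < input.length(); i++) {
            char c = input.charAt(i);
            switch (c) {
                case '&':
                    out.append("&amp;");
                    break;
                case '<':
                    out.append("&lt;");
                    break;
                case '>':
                    out.append("&gt;");
                    break;
                case '"':
                    out.append("&quot;");
                    break;
                case '\'':
                    out.append("&#39;");
                    break;
                default:
                    out.append(c);
            }
        }
        return out.toString();
    }
}
```

Escaping is context-specific: this function is suitable for HTML body text and quoted attributes, but it does not make a value safe inside a `<script>` block or a URL, which need their own encoding.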

CSRF

CSRF (Cross-Site Request Forgery) is a common web attack. This section explains CSRF in general terms, independent of any project code.

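Relationship with JWT

With a stateless RESTful API (for example, one that authenticates with a JWT sent in the `Authorization` header), you can consider disabling CSRF protection: the browser does not attach that header automatically, so a forged cross-site request arrives without credentials and fails token validation. If the JWT is stored in a cookie, the browser does attach it automatically and CSRF protection is still needed.

Stateful applications, such as traditional session-based web applications, generally need CSRF protection enabled.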

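How the attack works

- User authentication: The user logs in to a trusted website A, and website A stores the user's authentication information, such as a session cookie, in the user's browser.

- Lured by a malicious site: The attacker builds a malicious website B. When the user visits website B, it uses some technique (such as an auto-submitting form) to send a request to website A. Because the browser holds website A's authentication information, the request is sent to website A with the user's credentials (such as the cookie) attached.

- Website A processes the request: Because the request carries the user's valid credentials, website A mistakes it for a request made by the user and performs the operation, such as changing the user's password or transferring money.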

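Common attack scenarios

- Auto-submitting form: The malicious site contains a hidden form whose `action` attribute points to a sensitive endpoint on the trusted site. When the user visits the malicious site, the form is submitted automatically, triggering the attack request.

- Image tag attack: The attacker places an `<img>` tag on the malicious site and sets its `src` attribute to a sensitive endpoint on the trusted site. When the user visits the malicious site, the browser automatically requests the image, triggering the attack request.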

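Defenses

- Use a CSRF token

  - How it works: When generating a page, the server creates a unique CSRF token for each user's request and embeds it in the page (for example, as a hidden form field or a request header). The client must include this token when submitting the form or sending a request. The server checks that the token in the request matches the one it generated and rejects the request if it does not.

  - Example: adding a CSRF token to a form:

        <form action="/transfer" method="post">
          <input type="hidden" name="csrf_token" value="{{ csrf_token }}">
          <!-- other form fields -->
          <input type="submit" value="Transfer">
        </form>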
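The server side of the token check can be sketched with JDK classes as below. `CsrfTokens` and its method names are hypothetical; frameworks such as Spring Security generate and validate these tokens for you, so this only illustrates the idea of an unpredictable value compared against the copy kept in the session.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.SecureRandom;
import java.util.Base64;

// Hypothetical helper; shows generation and comparison of a CSRF token.
final class CsrfTokens {
    private static final SecureRandom RANDOM = new SecureRandom();

    // Generates an unpredictable token to keep in the session and embed in the form.
    static String newToken() {
        byte[] bytes = new byte[32];
        RANDOM.nextBytes(bytes);
        return Base64.getUrlEncoder().withoutPadding().encodeToString(bytes);
    }

    // Compares the submitted token with the session copy; a missing token never matches.
    static boolean matches(String sessionToken, String submittedToken) {
        if (sessionToken == null || submittedToken == null) {
            return false;
        }
        return MessageDigest.isEqual(
                sessionToken.getBytes(StandardCharsets.UTF_8),
                submittedToken.getBytes(StandardCharsets.UTF_8));
    }
}
```

The attacker's page can make the browser send cookies, but it cannot read the token embedded in the legitimate page, so a forged request fails the `matches` check.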

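- Check the request's `Referer` header

  - How it works: When it receives a request, the server checks the `Referer` header to make sure the request was sent from a page on its own site. If the `Referer` header is empty or points to another domain, the request is rejected.

  - Drawback: The `Referer` header can be tampered with, and some users disable it, so this method is not very reliable and is usually used as a supplementary measure.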

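- Use the SameSite cookie attribute

  - How it works: `SameSite` is a cookie attribute that controls whether a cookie is sent with cross-site requests. It can be set to `Strict` or `Lax`. `Strict` means the cookie is only sent with same-site requests; `Lax` means the cookie is also sent with some safe cross-site requests (such as top-level GET navigations).

  - Example: adding the `SameSite` attribute when setting a cookie:

        // Java example. javax.servlet.http.Cookie has no setSameSite method,
        // so the attribute is written through the Set-Cookie header instead.
        response.setHeader("Set-Cookie", "session_id=123456; SameSite=Strict");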

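In short, CSRF is a serious security threat, and developers need to take effective precautions when building web applications to protect users' information.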

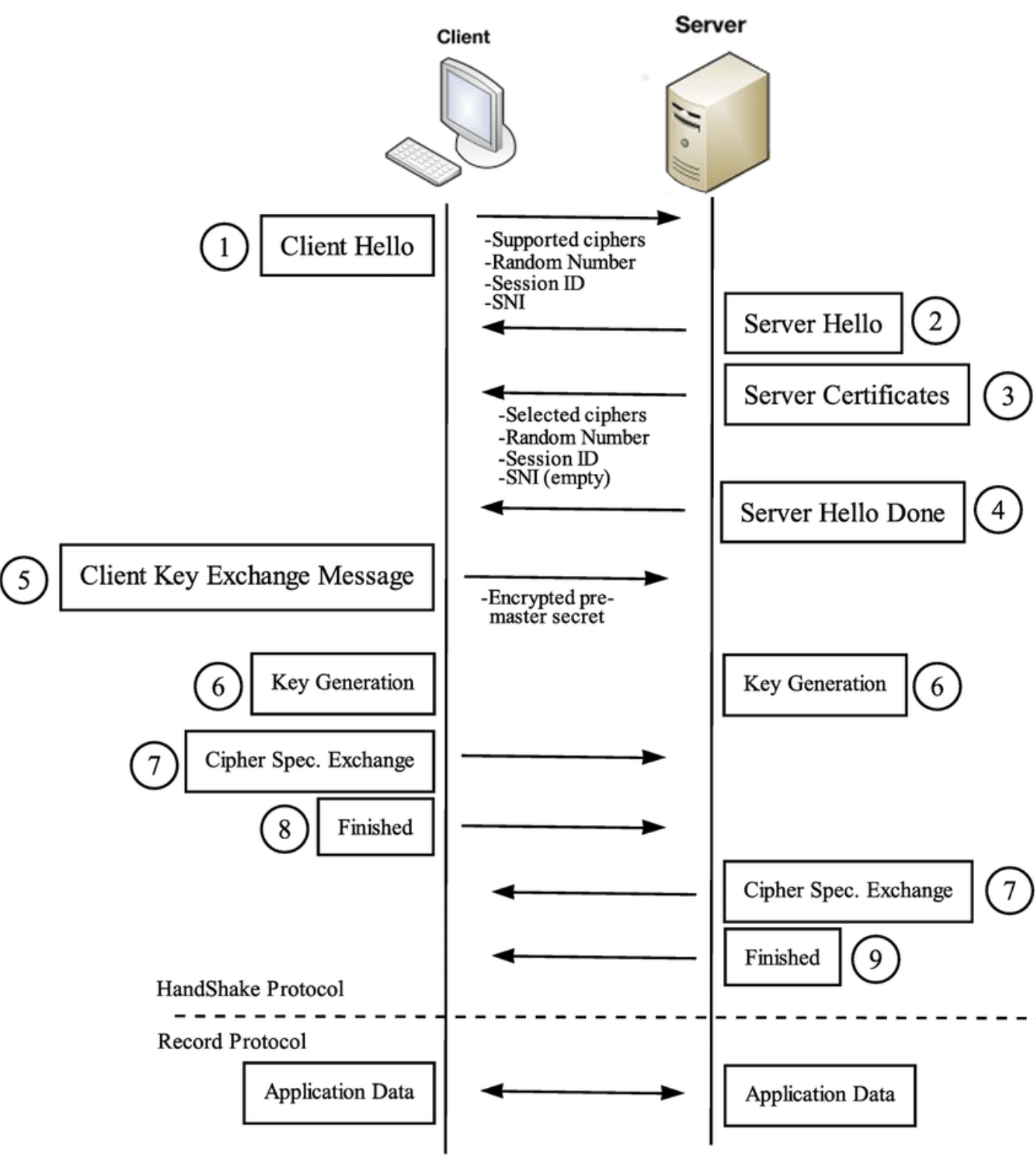

SSL/TLS handshake

For an RSA key exchange (TLS 1.2 and earlier), it goes roughly as follows:

- The ‘client hello’ message: The client initiates the handshake by sending a “hello” message to the server. The message will include which TLS version the client supports, the cipher suites supported, and a string of random bytes known as the “client random.”

- The ‘server hello’ message: In reply to the client hello message, the server sends a message containing the server’s SSL certificate, the server’s chosen cipher suite, and the “server random,” another random string of bytes that’s generated by the server.

- Authentication: The client verifies the server’s SSL certificate with the certificate authority that issued it. This confirms that the server is who it says it is, and that the client is interacting with the actual owner of the domain.

- The premaster secret: The client sends one more random string of bytes, the “premaster secret.” The premaster secret is encrypted with the public key and can only be decrypted with the private key by the server. (The client gets the public key from the server’s SSL certificate.)

- Private key used: The server decrypts the premaster secret.

- Session keys created: Both client and server generate session keys from the client random, the server random, and the premaster secret. They should arrive at the same results.

- Client is ready: The client sends a “finished” message that is encrypted with a session key.

- Server is ready: The server sends a “finished” message encrypted with a session key.

- Secure symmetric encryption achieved: The handshake is completed, and communication continues using the session keys.

REST API

RESTful API Versioning Strategy

I’ve implemented API versioning to ensure backward compatibility as the API evolves. The primary goal is to allow clients to continue using old versions while new features or changes are introduced in newer versions.

Common strategies I’ve used:

1. URI Path Versioning (most common in my experience)

- Example: `GET /api/v1/users`

- Pros: Easy to implement, clear version separation.

- Cons: Breaks RESTful resource URIs; not as flexible for granular versioning.

2. Header Versioning (for internal or advanced clients)

- Example: `GET /users` with header `API-Version: 2`

- Pros: Keeps URIs clean; supports gradual version rollouts.

- Cons: Harder to test/debug; tooling may not support.

3. Query Parameter Versioning (used sparingly)

- Example: `GET /users?version=2`

- Pros: Simple to implement.

- Cons: Can be misused; not ideal for long-term versioning.

Strategy Considerations:

- I use URI path versioning for public APIs where clarity and long-term support matter most.

- For internal or mobile clients, I prefer header versioning to avoid breaking changes and allow A/B testing.

- I always maintain proper documentation for each version and use semantic versioning principles where feasible (major changes require a new version).

URI path versioning example


This isolates version logic cleanly and allows parallel support.

Header-Based API Versioning Example

This approach uses @RequestMapping conditions to route based on custom headers, typically like API-Version: 1.

Controller Implementation:


DTO Classes:

public class UserDto { … }

Client Request Examples:

GET /api/users (sent once per version, with the `API-Version` header set accordingly)

Pros of Header-Based Versioning:

- Keeps the endpoint clean (`/api/users`)

- Allows smooth version rollout

- Compatible with gateway-based version negotiation

Caveat: Requires clients to be aware of and explicitly set headers, which may complicate browser-based calls or debugging.
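The routing decision behind header-based versioning can be sketched without any framework. Everything in this block is an assumption made for illustration: the `HeaderVersionRouter` class, the response bodies, and the choice to fall back to version 1 when the header is missing. In Spring the same decision is expressed through request mapping conditions on the header.

```java
import java.util.Map;

// Framework-free sketch of choosing a handler from the API-Version header.
final class HeaderVersionRouter {
    // Assumption: requests without the header are served by version 1.
    private static final String DEFAULT_VERSION = "1";

    // Placeholder v1 representation: a single name field.
    private static String usersV1() {
        return "{\"name\":\"alice\"}";
    }

    // Placeholder v2 representation: the name split into two fields.
    private static String usersV2() {
        return "{\"firstName\":\"alice\",\"lastName\":\"smith\"}";
    }

    // Same endpoint, different handler per version; returns null for an unknown version.
    // Simplification: real HTTP header names are case-insensitive, this lookup is not.
    static String handleGetUsers(Map<String, String> headers) {
        String version = headers.getOrDefault("API-Version", DEFAULT_VERSION);
        switch (version) {
            case "1":
                return usersV1();
            case "2":
                return usersV2();
            default:
                return null;
        }
    }
}
```

Deciding what happens when the header is absent or unknown (default version versus an error response) is part of the API contract and worth documenting alongside the versions themselves.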

header-based API versioning using Spring Cloud Gateway

Here is a practical example of implementing header-based API versioning using Spring Cloud Gateway alongside Spring Boot controllers to route requests based on the API-Version header.

Spring Cloud Gateway Configuration (application.yml)

spring: |

- Requests with header `API-Version: 1` route to backend service on port 8081 (v1).

- Requests with header `API-Version: 2` route to backend service on port 8082 (v2).

Spring Boot User Service V1 (running on port 8081)


Spring Boot User Service V2 (running on port 8082)


Summary

- Gateway routes requests based on `API-Version` header to different backend versions.

- Backend services implement respective API versions independently.

- This enables seamless side-by-side deployment and independent scaling.

- Clients specify version by setting the `API-Version` HTTP header.

DispatcherServlet

Definition

DispatcherServlet is a key component in the Spring Web MVC framework. It serves as the front controller in a Spring-based web application. A front controller is a single servlet that receives all HTTP requests and then dispatches them to the appropriate handlers (controllers) based on the request's URL, HTTP method, and other criteria.

Function

- Request Routing: It maps incoming requests to the appropriate `@Controller` classes and their methods using the configured handler mappings. For example, it can match a request to a specific controller method based on the URL pattern defined in the `@RequestMapping` annotation.

- View Resolution: After a controller method processes the request and returns a logical view name, the `DispatcherServlet` uses a view resolver to map this logical name to an actual view (such as a JSP page or a Thymeleaf template) and renders the response.

- Intercepting and Pre-processing: It can also use interceptors to perform pre-processing and post-processing tasks on requests and responses, like logging, authentication checks, etc.
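The front-controller idea can be illustrated with a tiny dispatcher in plain Java. `MiniDispatcher` is a hypothetical teaching model, far simpler than the real `DispatcherServlet`: it only covers the request-routing step and leaves out view resolution and interceptors.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Teaching model of a front controller; not how Spring implements DispatcherServlet.
final class MiniDispatcher {
    // Handler mappings: "METHOD path" -> handler taking a request body and returning a response body.
    private final Map<String, Function<String, String>> handlers = new HashMap<>();

    // Registers a handler, similar in spirit to declaring a request mapping.
    void register(String method, String path, Function<String, String> handler) {
        handlers.put(method + " " + path, handler);
    }

    // Single entry point for every request: find the handler and delegate, or answer 404.
    String dispatch(String method, String path, String body) {
        Function<String, String> handler = handlers.get(method + " " + path);
        if (handler == null) {
            return "404 Not Found";
        }
        return "200 OK: " + handler.apply(body);
    }
}
```

A usage sketch: after `dispatcher.register("GET", "/users", body -> "[]")`, calling `dispatcher.dispatch("GET", "/users", null)` reaches the registered handler, while any unregistered method and path combination falls through to the 404 branch.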

Rest API

Definition

REST (Representational State Transfer) is an architectural style for building web services. A REST API (Application Programming Interface) is a set of rules and conventions for creating and consuming web services based on the REST principles.

Characteristics

- Stateless: Each request from a client to a server must contain all the information necessary to understand and process the request. The server does not store any client-specific state between requests.

- Resource-Oriented: Resources are the key abstractions in a REST API. Resources can be things like users, products, or orders, and are identified by unique URIs (Uniform Resource Identifiers).

- HTTP Verbs: REST APIs use standard HTTP methods (verbs) to perform operations on resources. For example, `GET` is used to retrieve a resource, `POST` to create a new resource, `PUT` to update an existing resource, and `DELETE` to remove a resource.

How to create a good REST API

Design Principles

- Use Clear and Descriptive URIs: URIs should clearly represent the resources. For example, use `/users` to represent a collection of users and `/users/{userId}` to represent a specific user.

- Follow HTTP Verbs Correctly: Use `GET` for retrieval, `POST` for creation, `PUT` for full update, `PATCH` for partial update, and `DELETE` for deletion.

- Return Appropriate HTTP Status Codes: Indicate the result of the request clearly. For example, return 200 for successful retrievals, 201 for successful creations, and 4xx or 5xx for errors.

- Provide Good Documentation: Use tools like Swagger to generate documentation that explains the API endpoints, their input parameters, and expected output.

Security and Performance

- Authentication and Authorization: Implement proper authentication mechanisms (e.g., OAuth, JWT) to ensure that only authorized users can access the API.

- Caching: Implement caching strategies to reduce the load on the server and improve response times.

HTTP Status Codes

- 200 OK: Indicates that the request has succeeded. It is commonly used for successful `GET` requests to retrieve a resource or successful `PUT`/`PATCH` requests to update a resource.

- 201 Created: Used when a new resource has been successfully created. For example, when a client sends a `POST` request to create a new user, and the server successfully creates the user, it returns a 201 status code.

- 400 Bad Request: Signifies that the server cannot process the request due to a client-side error, such as malformed request syntax, invalid request message framing, or deceptive request routing.

- 401 Unauthorized: Indicates that the request requires user authentication. The client needs to provide valid credentials to access the requested resource.

- 403 Forbidden: The client is authenticated, but it does not have permission to access the requested resource. For example, a regular user trying to access an administrator-only endpoint.

- 404 Not Found: The requested resource could not be found on the server. This might be because the URL is incorrect or the resource has been deleted.

- 500 Internal Server Error: A generic error message indicating that the server encountered an unexpected condition that prevented it from fulfilling the request. It could be due to a programming error, database issues, etc.

- 502 Bad Gateway: The server, while acting as a gateway or proxy, received an invalid response from an upstream server.

- 503 Service Unavailable: The server is currently unable to handle the request due to temporary overloading or maintenance. The client may try again later.

- 504 Gateway Timeout: The server, while acting as a gateway or proxy, did not receive a timely response from an upstream server.
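As a rough illustration of how the verbs and status codes above fit together, here is a minimal in-memory sketch. The class `UserApiSketch`, its `Response` holder, and the method names are hypothetical and not tied to any framework; a real Spring Boot controller would express the same decisions with `ResponseEntity`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class UserApiSketch {
    /** Minimal response holder: an HTTP status code plus a body (hypothetical type). */
    public static final class Response {
        public final int status;
        public final String body;

        Response(int status, String body) {
            this.status = status;
            this.body = body;
        }
    }

    private final Map<Integer, String> users = new LinkedHashMap<>();
    private int nextId = 1;

    /** POST /users: 201 on success, 400 when the payload is invalid. */
    public Response createUser(String name) {
        if (name == null || name.trim().isEmpty()) {
            return new Response(400, "name must not be blank");
        }
        int id = nextId++;
        users.put(id, name);
        return new Response(201, "/users/" + id);
    }

    /** GET /users/{id}: 200 with the resource, 404 when it does not exist. */
    public Response getUser(int id) {
        String name = users.get(id);
        if (name == null) {
            return new Response(404, "user " + id + " not found");
        }
        return new Response(200, name);
    }

    /** DELETE /users/{id}: 403 for non-admin callers, 404 when missing, 200 otherwise. */
    public Response deleteUser(int id, boolean callerIsAdmin) {
        if (!callerIsAdmin) {
            return new Response(403, "admin role required");
        }
        if (users.remove(id) == null) {
            return new Response(404, "user " + id + " not found");
        }
        return new Response(200, "deleted");
    }

    public static void main(String[] args) {
        UserApiSketch api = new UserApiSketch();
        System.out.println(api.createUser("alice").status);
        System.out.println(api.getUser(1).status);
        System.out.println(api.getUser(99).status);
        System.out.println(api.deleteUser(1, false).status);
    }
}
```

The point of the sketch is that the status code is chosen by the outcome (created, missing, not permitted), not by the verb alone.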

Introduction of GraphQL, WebSocket, gRPC

GraphQL

- Definition: GraphQL is a query language for APIs and a runtime for fulfilling those queries with your existing data. It allows clients to specify exactly what data they need from an API, reducing over-fetching and under-fetching of data.

- Advantages: It provides a more efficient way of data retrieval compared to traditional REST APIs, especially in complex applications where clients may need different subsets of data. It also has a strong type system and can be introspected by clients.

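The table below compares GraphQL and REST across several dimensions.

| Dimension | RESTful | GraphQL |
|---|---|---|
| Data fetching | Resources are usually fetched from fixed endpoints, each returning a fixed data structure. For example, `/users` returns the list of all users and `/users/{id}` returns the details of one user. A client that needs several different resources may have to call several endpoints. | The client specifies exactly which fields it needs and the server returns only those. By declaring fields in the query, a single request can fetch several related resources, which avoids over-fetching and under-fetching. |
| Data transferred | May return more data than needed (over-fetching), or require several requests to assemble the needed data (under-fetching), which increases payload size or request count. For example, the client needs only the user's name and email, but `/users/{id}` returns every detail, including address and phone number. | Returns only the requested fields, which cuts unnecessary transfer and is especially useful on bandwidth-limited clients such as mobile devices. |
| Versioning | Commonly done by adding a version number to the URL (e.g. `/v1/users`, `/v2/users`). A new version may change the structure and behavior of endpoints, and clients must target a specific version and adapt to it. | Because clients define their own queries, adding or changing server-side fields does not necessarily affect existing queries. The data model can evolve without breaking existing clients, so versioning is more flexible and strict version separation is usually unnecessary. |
| Caching | Can use HTTP caching mechanisms such as `Cache-Control` and `ETag`. Because each endpoint returns a relatively fixed structure, cache granularity is coarse, which can lead to cache invalidation or cache misses. | Caching is more complex because each client's query may differ. Custom server-side caching per query is possible but takes more development and maintenance work. |
| Error handling | Usually signals the outcome with HTTP status codes, such as 200 for success, 404 for resource not found, and 500 for an internal server error. More detailed error information may need to be returned in the response body. | Can return detailed error information in the response, including the location of the error in the query and a description, which helps the client locate and handle the error. |
| Development efficiency | Developers must define an endpoint for each resource and operation. When requirements change or features are added, several endpoints may need to be modified or created, so development and maintenance costs are higher. | Developers define the data model and the GraphQL schema, and clients query against the schema. Because clients have more autonomy, server-side work concentrates on schema updates, which improves development efficiency to some extent. |
| Learning curve | Built on HTTP and REST principles. The concepts are simple and intuitive, and developers pick them up easily, especially those with web development experience. | Requires learning GraphQL syntax, schema definition, queries, and mutations. There is some learning cost for beginners, but it allows more flexible data interaction once mastered. |
| Ecosystem and tooling | Rich tool and framework support, such as Express (Node.js) and Django REST framework (Python), and good compatibility with existing web technology and infrastructure. | The ecosystem keeps growing, with many good client and server libraries such as Apollo Server (Node.js) and Relay (React), but it may be somewhat less mature and less widespread than the REST ecosystem. |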

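The following sketch shows how data fetching differs between REST and GraphQL.

REST data fetching example:

Client ----> GET /users/{id} ----> Server

(returns the user's details, possibly including many fields the client does not need)

Client ----> GET /posts?userId={id} ----> Server

(an extra request is needed to fetch the user's posts)

GraphQL data fetching example:

Client ----> POST /graphql ----> Server, with the query:

{

user(id: "{id}") {

name

email

posts {

title

content

}

}

}

(one request returns the user and the related posts, with exactly the requested fields)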


WebSocket

- Definition: WebSocket is a communication protocol that provides full-duplex communication channels over a single TCP connection. It enables real-time communication between a client and a server.

- Advantages: It reduces the overhead of traditional HTTP requests by maintaining a persistent connection, which is suitable for applications that require real-time updates, such as chat applications, online gaming, and live dashboards.

gRPC

- Definition: gRPC is a high-performance, open-source universal RPC (Remote Procedure Call) framework. It uses Protocol Buffers as the interface definition language and serialization format.

- Advantages: It offers high performance, low latency, and strong typing. It is suitable for microservices architectures where efficient communication between services is crucial.

Server Sent Events (SSE)

- Definition: Server Sent Events (SSE) let the server push updates to the client over a single, long-lived HTTP connection. They are similar to long polling, but more efficient for unidirectional communication from the server to the client: the server sends new data whenever it is available, and the client does not have to make repeated requests.

- Advantages: SSE suits scenarios where the server frequently produces updates that must reach the client. Unlike WebSocket, SSE is designed specifically for server-to-client communication and does not support client-to-server messaging. This makes it simpler to implement and to integrate with existing HTTP infrastructure, such as load balancers and firewalls, without special handling.
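To make the wire format concrete, here is a minimal sketch of how one SSE message is framed in a `text/event-stream` response: optional `event:` line, one `data:` line per payload line, and a blank line to end the event. The class and method names (`SseFrameSketch`, `frame`) are hypothetical helpers, not part of any library.

```java
public class SseFrameSketch {
    /**
     * Formats one Server-Sent Event in the text/event-stream format.
     * eventName may be null, in which case the "event:" field is omitted.
     */
    public static String frame(String eventName, String data) {
        StringBuilder sb = new StringBuilder();
        if (eventName != null) {
            sb.append("event: ").append(eventName).append('\n');
        }
        // Each line of the payload gets its own "data:" field.
        for (String line : data.split("\n", -1)) {
            sb.append("data: ").append(line).append('\n');
        }
        // A blank line terminates the event.
        return sb.append('\n').toString();
    }

    public static void main(String[] args) {
        System.out.print(frame("price", "42"));
        System.out.print(frame(null, "line one\nline two"));
    }
}
```

A server would write such frames to the open response stream and flush after each one; the browser's `EventSource` API parses them on the client side.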

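Reactive Java: Reactive Programming

Reactive programming in Java is generally based on the Reactive Streams specification. The common implementations are Project Reactor and RxJava. The core ideas and implementation steps are as follows.

Core Ideas

- Declarative programming: define the processing logic of the data stream (filter, transform, merge, etc.) instead of manipulating data imperatively step by step.

- Asynchronous and non-blocking: data is produced and consumed asynchronously without blocking threads, which improves resource utilization.

- Back-pressure support: the consumer controls the rate at which the producer emits data, which avoids overload.

- Multi-value vs single-value types: a stream (`Flux` / `Observable`) represents an asynchronous sequence of many values, and a single (`Mono` / `Single`) represents one asynchronous value.

Implementation Steps (using Project Reactor)

- Add the dependency

<dependency>

- Create the data stream

- Use `Flux` for a multi-element stream and `Mono` for a single-element stream.

Flux<String> flux = Flux.just("A", "B", "C");

- Process data with chained operators

- Filter, transform, merge, delay, and so on.

flux.filter(s -> s.startsWith("A"))

- Schedule asynchronous execution

- Switch thread pools with a `Scheduler` to run work asynchronously without blocking.

flux.publishOn(Schedulers.boundedElastic())

- Handle back-pressure

- Use operators such as `onBackpressureBuffer()` and `onBackpressureDrop()`.

flux.onBackpressureBuffer(100);

- Integrate with external systems

- Combine with reactive database drivers (R2DBC), message queues (Reactor Kafka), web frameworks (Spring WebFlux), and so on.

Simple Example

Flux.range(1, 10)

Summary

Reactive programming in Java centers on reactive types such as `Flux` and `Mono`, combined with declarative operators and asynchronous scheduling. Back-pressure keeps the system robust. Project Reactor and RxJava are the two mainstream implementations. Reactor is the one to learn first, since it is widely used in the Spring ecosystem.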
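The back-pressure idea can be sketched without any external library, using the Reactive Streams interfaces that ship with the JDK as `java.util.concurrent.Flow` (Java 9+). This is not Project Reactor code: `RangePublisher` and `BatchingSubscriber` are hypothetical names, and the publisher is deliberately synchronous and single-threaded to keep the demand accounting visible. The publisher emits only as many items as the subscriber has requested, and the subscriber asks for the next batch only when it has finished the current one.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;

public class BackpressureSketch {

    /** Emits the integers 1..count, but never more than the subscriber has requested. */
    public static final class RangePublisher implements Flow.Publisher<Integer> {
        private final int count;

        public RangePublisher(int count) {
            this.count = count;
        }

        @Override
        public void subscribe(Flow.Subscriber<? super Integer> subscriber) {
            subscriber.onSubscribe(new Flow.Subscription() {
                private int next = 1;
                private boolean done;

                @Override
                public void request(long n) {
                    // Emit at most n items for this request.
                    for (long i = 0; i < n && !done; i++) {
                        if (next > count) {
                            done = true;
                            subscriber.onComplete();
                            return;
                        }
                        subscriber.onNext(next++);
                    }
                }

                @Override
                public void cancel() {
                    done = true;
                }
            });
        }
    }

    /** Pulls items in fixed-size batches, which caps how much work is in flight. */
    public static final class BatchingSubscriber implements Flow.Subscriber<Integer> {
        public final List<Integer> received = new ArrayList<>();
        public int requestCalls;
        public boolean completed;
        private final int batchSize;
        private int remainingInBatch;
        private Flow.Subscription subscription;

        public BatchingSubscriber(int batchSize) {
            this.batchSize = batchSize;
        }

        @Override
        public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            requestBatch();
        }

        @Override
        public void onNext(Integer item) {
            received.add(item);
            if (--remainingInBatch == 0) {
                requestBatch(); // signal demand only after the batch is processed
            }
        }

        @Override
        public void onError(Throwable throwable) {
            throw new IllegalStateException(throwable);
        }

        @Override
        public void onComplete() {
            completed = true;
        }

        private void requestBatch() {
            remainingInBatch = batchSize;
            requestCalls++;
            subscription.request(batchSize);
        }
    }

    public static void main(String[] args) {
        BatchingSubscriber subscriber = new BatchingSubscriber(3);
        new RangePublisher(10).subscribe(subscriber);
        System.out.println(subscriber.received + " in " + subscriber.requestCalls + " requests");
    }
}
```

Production libraries such as Reactor implement the same contract with asynchronous, non-recursive drain loops; the sketch only shows who controls the flow rate.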


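Handling Back-pressure: An Example

The following example is a real-time order system in Java built on Kafka. It focuses on using Reactor Kafka to handle back-pressure and keep the consumer from being overloaded.

Scenario

- Orders flow in from the Kafka topic `orders`.

- Each order needs heavy business processing (for example, a database write).

- If processing cannot keep up with the rate at which records arrive from Kafka, back-pressure handling is needed.

Tech Stack

- Kafka: message broker for high-throughput real-time streams.

- Reactor Kafka: reactive Kafka client with back-pressure support.

- Spring Boot (optional): convenient integration.

- R2DBC (optional): reactive database connectivity.

Sample Code (core logic)

import reactor.kafka.receiver.KafkaReceiver;

Key Points

| Technique / operation | Purpose |
|---|---|
| `KafkaReceiver.receive()` | Returns a Flux |
| `onBackpressureBuffer(1000)` | Buffers up to 1000 orders when consumption lags. Anything beyond that must be dropped or reported; the plain one-argument overload signals an overflow error, so dropping or alerting needs an overflow handler or strategy. |
| `flatMap(..., 10)` | Processes at most 10 orders concurrently, which limits concurrency and indirectly applies back-pressure |
| `acknowledge()` | Manually acknowledges the offset, so that it is committed only after processing has finished |

Practical Advice

- Use R2DBC for database writes to get truly end-to-end asynchronous processing.

- To avoid losing data, write records that overflow the back-pressure limit to a Dead Letter Topic.

- Monitor lag, throughput, and queue size with a metrics system such as Prometheus.

Summary

This is a typical scenario that combines reactive Kafka, back-pressure control, and concurrency limiting. It suits high-concurrency real-time businesses such as orders, payments, and risk control. Compared with a traditional blocking consumer, a reactive consumer can make fuller use of resources, control load, and keep the system stable.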

Test

1. Different Type of Tests in whole project lifecycle

- Unit Tests: These are the most granular level of tests. They focus on testing individual units of code, such as a single function, method, or class. Unit tests are usually written by developers and are aimed at verifying that a particular piece of code behaves as expected in isolation. They help in catching bugs early in the development process and make the code easier to maintain.

- Integration Tests: These tests check how different components or modules of the system work together. They ensure that the interfaces between various parts of the application are functioning correctly. For example, in a software system with a database layer, a business logic layer, and a presentation layer, integration tests would verify that data can flow properly between these layers.

- System Tests: System tests evaluate the entire system as a whole to ensure that it meets the specified requirements. They simulate real-world scenarios and user interactions to test the system’s functionality, performance, and usability, with all components running together in a production-like environment.

- Acceptance Tests: These tests are performed to determine whether the system meets the business requirements and is acceptable to the end-users or stakeholders. Acceptance tests can be user acceptance tests (UAT), where end-users test the system to see if it meets their needs, or contract acceptance tests, which are based on the requirements specified in a contract.

- Regression Tests: After making changes to the system, such as bug fixes or new feature implementations, regression tests are run to ensure that the existing functionality has not been broken. They are a subset of the overall test suite that focuses on the areas of the system that are likely to be affected by the changes.

2. Unit Test, Mock

- Unit Test: A unit test is a piece of code that exercises a specific unit of functionality in an isolated way. It provides a set of inputs to the unit under test and verifies that the output is as expected. Unit tests should be fast, independent, and repeatable. For example, in a Java application, a unit test for a method that calculates the sum of two numbers would provide different pairs of numbers as inputs and check if the calculated sum is correct.

- Mock: In unit testing, a mock is an object that mimics the behavior of a real object, such as a database, a web service, or another component. Mocks are used when the real object is difficult to create, expensive to set up, or not available during testing. For instance, if a unit of code depends on a database call, instead of actually connecting to the database, a mock object can be used to return predefined data. This allows the unit test to focus on testing the logic of the unit under test without being affected by the external dependencies.
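The unit-test-plus-mock idea can be sketched with a hand-written test double, without JUnit or Mockito. All names here (`ProductRepository`, `PriceService`, `StubRepository`) are hypothetical; in a real project the stub would typically be a Mockito mock and the checks would be JUnit assertions.

```java
import java.util.HashMap;
import java.util.Map;

public class PriceServiceTestSketch {

    /** Dependency that would normally hit a database or a remote service. */
    public interface ProductRepository {
        long findPriceCents(String productId);
    }

    /** Unit under test: pure logic on top of the repository. */
    public static final class PriceService {
        private final ProductRepository repository;

        public PriceService(ProductRepository repository) {
            this.repository = repository;
        }

        public long totalCents(String productId, int quantity) {
            if (quantity < 0) {
                throw new IllegalArgumentException("quantity must be >= 0");
            }
            return repository.findPriceCents(productId) * quantity;
        }
    }

    /** Hand-written test double: returns predefined prices and records calls. */
    public static final class StubRepository implements ProductRepository {
        private final Map<String, Long> prices = new HashMap<>();
        public int calls;

        public StubRepository with(String productId, long priceCents) {
            prices.put(productId, priceCents);
            return this;
        }

        @Override
        public long findPriceCents(String productId) {
            calls++;
            return prices.get(productId);
        }
    }

    public static void main(String[] args) {
        StubRepository stub = new StubRepository().with("p1", 250);
        PriceService service = new PriceService(stub);

        // Verify the result and the interaction, with no database involved.
        if (service.totalCents("p1", 4) != 1000) throw new AssertionError("wrong total");
        if (stub.calls != 1) throw new AssertionError("repository should be called once");
        System.out.println("ok");
    }
}
```

Because the dependency is passed in through the constructor, the test can swap in the stub and stay fast, independent, and repeatable.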

3. Testing Rest Api with Rest Assured

Rest Assured is a Java library used for testing RESTful APIs. It simplifies the process of sending HTTP requests to an API and validating the responses.

- Sending Requests: With Rest Assured, you can easily send different types of HTTP requests like GET, POST, PUT, DELETE, etc. For example, to send a GET request to an API endpoint, you can use code like `given().when().get("https://example.com/api/endpoint").then();`

- Validating Responses: You can validate various aspects of the response, such as the status code (e.g., `then().statusCode(200);` to check if the response has a 200 status code), the headers, and the body. You can use methods to extract data from the response body and perform assertions on it. For instance, if the API returns JSON data, you can use JsonPath expressions in Rest Assured to extract and validate specific fields in the JSON.

4. AUTOMATION TEST

- BDD - Cucumber - annotations: Behavior-Driven Development (BDD) is an approach that focuses on defining the behavior of the system from the perspective of the stakeholders. Cucumber is a popular tool for implementing BDD in Java (and other languages). Cucumber annotations mark the step definition methods that implement the steps written in feature files. For example, `@Given`, `@When`, and `@Then` are commonly used annotations in step definitions: `@Given` sets up the preconditions, `@When` describes the action being performed, and `@Then` defines the expected outcome. Feature files are written in Gherkin (a simple syntax used by Cucumber) and use the matching `Given`, `When`, and `Then` keywords to describe the behavior of the system in a human-readable format.

- Load Test with JMeter: Apache JMeter is a tool used for load testing web applications, web services, and other types of applications. It can simulate a large number of concurrent users sending requests to the application to measure its performance under load. You can configure JMeter to define the number of threads (simulating users), the ramp-up period (how quickly the users are added), and the duration of the test. It can generate detailed reports on metrics such as response times, throughput, and error rates, helping you identify bottlenecks in the application.

- Performance tool JProfiler: JProfiler is a powerful Java profiling tool used for performance analysis. It can help you identify performance issues in your Java applications by analyzing memory usage, CPU utilization, and thread behavior. It allows you to take snapshots of the application’s state at different times, trace method calls, and find memory leaks. You can use JProfiler to optimize your code by identifying methods that consume a lot of resources and improving their performance.

- AB Test: AB testing is a method of comparing two versions (A and B) of a web page, application feature, or marketing campaign to determine which one performs better. In AB testing, a random subset of users is shown version A, and another random subset is shown version B. Metrics such as click-through rates, conversion rates, or user engagement are then measured for each version. Based on the results, you can decide which version to implement permanently. AB testing is often used in web development and digital marketing to make data-driven decisions about changes to the product or service.

Database

- What is data modeling? Why do we need it? When would you need it?

- What is primary key? How is it different from unique key?

- What is normalization? Why do you need to normalize?

- What does data redundancy mean? Can you give an example of each?

- What is database integrity? Why do you need it?

- What are joins and explain different types of joins in detail.

- Explain indexes and why are they needed?

- If we have 1B rows in our relational database and do not want to fetch them all at once, in what ways can we partition the rows?

Explain clustered and non-clustered index and their differences.

1. Clustered Index

Definition

A clustered index determines the physical order of data storage in a table. In other words, the rows of the table are physically arranged on disk in the order of the clustered index key. A table can have only one clustered index because there can be only one physical ordering of the data rows.

How it Works

- Index Structure: The clustered index is often implemented as a B-tree data structure. The leaf nodes of the B-tree contain the actual data rows of the table, sorted according to the index key.

- Data Retrieval: When you query data using the columns in the clustered index, the database can quickly locate the relevant rows because they are physically stored in the order of the index. For example, if you have a `Customers` table with a clustered index on the `CustomerID` column, and you query for a specific `CustomerID`, the database can efficiently navigate through the B-tree to find the corresponding row.

Example

-- Create a table with a clustered index on the ID column |

In this example, the ProductID column is the clustered index. The rows in the Products table will be physically sorted by the ProductID value.

2. Non-Clustered Index

Definition

A non-clustered index is a separate structure from the actual data rows. It contains a copy of the indexed columns and a pointer to the location of the corresponding data row in the table. A table can have multiple non-clustered indexes.

How it Works

- Index Structure: Similar to a clustered index, a non-clustered index is also typically implemented as a B-tree. However, the leaf nodes of the non-clustered index do not contain the actual data rows but rather pointers to the data rows in the table.

- Data Retrieval: When you query data using the columns in a non-clustered index, the database first searches the non-clustered index to find the pointers to the relevant data rows. Then it uses these pointers to access the actual data rows in the table. This additional step of accessing the data rows can make non-clustered index lookups slightly slower than clustered index lookups for large datasets.

Example

-- Create a table |

In this example, `idx_CustomerID` is a non-clustered index on the `CustomerID` column. The index stores the `CustomerID` values and pointers to the corresponding rows in the `Orders` table.

3. Differences between Clustered and Non-Clustered Indexes

Physical Order of Data

- Clustered Index: Determines the physical order of data storage in the table. The data rows are sorted according to the clustered index key.

- Non-Clustered Index: Does not affect the physical order of data in the table. It is a separate structure that points to the data rows.

Number of Indexes per Table

- Clustered Index: A table can have only one clustered index because there can be only one physical ordering of the data.

- Non-Clustered Index: A table can have multiple non-clustered indexes. You can create non-clustered indexes on different columns or combinations of columns to improve query performance for various types of queries.

Storage Space

- Clustered Index: Its leaf level is the table data itself, so it adds little storage beyond the rows (mainly the upper levels of the B-tree).

- Non-Clustered Index: Stores a copy of the indexed columns plus pointers to the data rows. Each one is usually much smaller than the table, but it is extra storage on top of the table, and the cost grows with every index added.

Query Performance

- Clustered Index: Is very efficient for range queries (e.g., retrieving all rows where the index value falls within a certain range) because the data is physically sorted. It also has an advantage for queries that return a large number of rows.

- Non-Clustered Index: Is useful for queries that filter on a small subset of data using the indexed columns. However, for queries that need to access a large number of rows, the additional step of following the pointers to the data rows can make it slower than using a clustered index.

Insert, Update, and Delete Operations

- Clustered Index: Inserting rows out of key order, or updating the key itself, can be more expensive because rows may have to be moved (for example through page splits) to keep the data in key order.

- Non-Clustered Index: Writes do not move the table rows, but every non-clustered index that covers an affected column must also be updated, so each additional index adds write overhead.
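The difference in lookup paths can be pictured with an in-memory analogy. This is only an analogy, not how a database engine is implemented: a `TreeMap` keyed by primary key stands in for the clustered index (the rows live inside the ordered structure), and a second map from city to primary keys stands in for a non-clustered index (it stores only keys, so reading a row takes a second lookup). The class name, columns, and sample data are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.NavigableSet;
import java.util.TreeMap;
import java.util.TreeSet;

public class IndexAnalogySketch {
    // "Clustered index": rows are stored inside the structure, ordered by CustomerID.
    private final NavigableMap<Integer, String[]> rowsByCustomerId = new TreeMap<>();

    // "Non-clustered index" on city: holds only keys that point back to the rows.
    private final NavigableMap<String, NavigableSet<Integer>> idsByCity = new TreeMap<>();

    public void insert(int customerId, String name, String city) {
        rowsByCustomerId.put(customerId, new String[] {name, city});
        // Every write also has to maintain the secondary index.
        idsByCity.computeIfAbsent(city, c -> new TreeSet<>()).add(customerId);
    }

    /** Range query on the clustered key: one ordered traversal over adjacent rows. */
    public List<String> namesWithIdBetween(int from, int to) {
        List<String> names = new ArrayList<>();
        for (String[] row : rowsByCustomerId.subMap(from, true, to, true).values()) {
            names.add(row[0]);
        }
        return names;
    }

    /** Query via the secondary index: find the keys first, then fetch each row. */
    public List<String> namesInCity(String city) {
        List<String> names = new ArrayList<>();
        NavigableSet<Integer> ids = idsByCity.get(city);
        if (ids == null) {
            return names;
        }
        for (int id : ids) {
            names.add(rowsByCustomerId.get(id)[0]); // the extra lookup step
        }
        return names;
    }

    public static void main(String[] args) {
        IndexAnalogySketch table = new IndexAnalogySketch();
        table.insert(3, "Cara", "Oslo");
        table.insert(1, "Ann", "Oslo");
        table.insert(2, "Bob", "Rome");
        System.out.println(table.namesWithIdBetween(1, 2));
        System.out.println(table.namesInCity("Oslo"));
    }
}
```

Using the primary key as the "pointer" mirrors what some engines do when a table has a clustered index, but the exact pointer format is engine-specific.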

What are normal forms

In the context of databases, “NF” usually stands for “Normal Form”. Normal forms are used in database design to organize data in a way that reduces data redundancy, improves data integrity, and makes the database more efficient and easier to manage. Some of the commonly known normal forms are:

- First Normal Form (1NF): A relation is in 1NF if it has atomic values, meaning that each cell in the table contains only a single value and not a set of values. For example, a table where a column stores multiple phone numbers separated by commas would not be in 1NF.

- Second Normal Form (2NF): A relation is in 2NF if it is in 1NF and all non-key attributes are fully functionally dependent on the primary key. This means that no non-key attribute should depend only on a part of the primary key in case of a composite primary key.

- Third Normal Form (3NF): A relation is in 3NF if it is in 2NF and there is no transitive dependency of non-key attributes on the primary key. That is, a non-key attribute should not depend on another non-key attribute.

- Boyce-Codd Normal Form (BCNF): BCNF is a stronger version of 3NF. A relation is in BCNF if for every functional dependency X → Y, X is a superkey. In other words, every determinant must be a candidate key.

- Fourth Normal Form (4NF): A relation is in 4NF if it is in BCNF and there are no non-trivial multivalued dependencies.

1. Examples of Normalization

First Normal Form (1NF)

Original Table (Not in 1NF):

Suppose we have a Students table that stores information about students and their hobbies.

| Student ID | Student Name | Hobbies |

|---|---|---|

| 1 | John | Reading, Painting |

| 2 | Jane | Singing, Dancing |

The Hobbies column contains multiple values separated by commas, which violates 1NF.

Converted to 1NF:

We create a new table structure.

Students Table:

| Student ID | Student Name |

|---|---|

| 1 | John |

| 2 | Jane |

StudentHobbies Table:

| Student ID | Hobby |

|---|---|

| 1 | Reading |

| 1 | Painting |

| 2 | Singing |

| 2 | Dancing |

Second Normal Form (2NF)

Original Table (Violating 2NF):

Consider an Orders table with a composite primary key (Order ID, Product ID).

| Order ID | Product ID | Product Name | Order Quantity |

|---|---|---|---|

| 1 | 101 | Laptop | 2 |

| 1 | 102 | Mouse | 3 |

| 2 | 101 | Laptop | 1 |

The Product Name depends only on the Product ID (part of the composite primary key), violating 2NF.

Converted to 2NF:

Products Table:

| Product ID | Product Name |

|---|---|

| 101 | Laptop |

| 102 | Mouse |

OrderDetails Table:

| Order ID | Product ID | Order Quantity |

|---|---|---|

| 1 | 101 | 2 |

| 1 | 102 | 3 |

| 2 | 101 | 1 |

Third Normal Form (3NF)

Original Table (Violating 3NF):

Let’s have an Employees table.

| Employee ID | Department ID | Department Name | Employee Salary |

|---|---|---|---|

| 1 | 1 | IT | 5000 |

| 2 | 1 | IT | 6000 |

| 3 | 2 | HR | 4500 |

The Department Name is transitively dependent on the Employee ID through the Department ID, violating 3NF.

Converted to 3NF:

Departments Table:

| Department ID | Department Name |

|---|---|

| 1 | IT |

| 2 | HR |

Employees Table:

| Employee ID | Department ID | Employee Salary |

|---|---|---|

| 1 | 1 | 5000 |

| 2 | 1 | 6000 |

| 3 | 2 | 4500 |

2. Examples of Database Integrity

Entity Integrity

- Explanation: Ensures that each row in a table is uniquely identifiable, usually through a primary key.

- Example: In a `Customers` table, `CustomerID` is set as the primary key.

CREATE TABLE Customers (

CustomerID INT PRIMARY KEY,

CustomerName VARCHAR(100),

Email VARCHAR(100)

);

If you try to insert a new row with an existing `CustomerID`, the database will reject the insert operation because it violates entity integrity.

Referential Integrity

- Explanation: Maintains the consistency between related tables. A foreign key in one table must match a primary key value in another table.

- Example: Consider an `Orders` table and a `Customers` table. The `Orders` table has a foreign key `CustomerID` that references `CustomerID` in the `Customers` table.

CREATE TABLE Customers (

CustomerID INT PRIMARY KEY,

CustomerName VARCHAR(100)

);

CREATE TABLE Orders (

OrderID INT PRIMARY KEY,

CustomerID INT,

OrderDate DATE,

FOREIGN KEY (CustomerID) REFERENCES Customers(CustomerID)

);

If you try to insert an order with a `CustomerID` that does not exist in the `Customers` table, the database will not allow it due to referential integrity.

Domain Integrity

- Explanation: Ensures that the data entered into a column falls within an acceptable range of values.

- Example: In a `Products` table, the `Price` column should only accept positive values.

CREATE TABLE Products (

ProductID INT PRIMARY KEY,

ProductName VARCHAR(100),

Price DECIMAL(10, 2) CHECK (Price > 0)

);

If you try to insert a product with a zero or negative price, the database will reject the insert because it violates domain integrity.

How do you represent a multi-valued attribute in a database?

A multi-valued attribute is an attribute that can have multiple values for a single entity. Here are the common ways to represent multi-valued attributes in different types of databases:

Relational Databases

1. Using a Separate Table (Normalization Approach)

This is the most common and recommended method in relational databases as it adheres to the principles of database normalization.

Steps:

- Identify the Entities and Attributes: Suppose you have an `Employees` entity with a multi-valued attribute `Skills`. An employee can have multiple skills, so the `Skills` attribute is multi-valued.

- Create a New Table: Create a new table to store the multi-valued data. This table will have a foreign key that references the primary key of the main entity table.

Define the Schema:

-- Create the Employees table

CREATE TABLE Employees (

employee_id INT PRIMARY KEY AUTO_INCREMENT,

employee_name VARCHAR(100)

);

-- Create the Skills table

CREATE TABLE Skills (

skill_id INT PRIMARY KEY AUTO_INCREMENT,

employee_id INT,

skill_name VARCHAR(50),

FOREIGN KEY (employee_id) REFERENCES Employees(employee_id)

);

Insert and Query Data:

-- Insert an employee

INSERT INTO Employees (employee_name) VALUES ('John Doe');

-- Insert skills for the employee

INSERT INTO Skills (employee_id, skill_name) VALUES (1, 'Java');

INSERT INTO Skills (employee_id, skill_name) VALUES (1, 'Python');

-- Query all skills of an employee

SELECT skill_name

FROM Skills

WHERE employee_id = 1;

2. Using Delimited Lists (Denormalization Approach)

In some cases, for simplicity or performance reasons, you may choose to use delimited lists to represent multi-valued attributes.

Steps:

Modify the Main Table: Instead of creating a separate table, you add a single column to the main table and store multiple values separated by a delimiter (e.g., comma).

-- Create the Employees table with a multi-valued attribute as a delimited list

CREATE TABLE Employees (

employee_id INT PRIMARY KEY AUTO_INCREMENT,

employee_name VARCHAR(100),

skills VARCHAR(200)

);

Insert and Query Data:

-- Insert an employee with skills

INSERT INTO Employees (employee_name, skills) VALUES ('John Doe', 'Java,Python');

-- Query employees with a specific skill

SELECT *

FROM Employees

WHERE skills LIKE '%Java%';

However, this approach has several drawbacks. It violates first normal form, makes queries and updates on individual values awkward (for example, `LIKE '%Java%'` also matches `JavaScript`), and can lead to data integrity issues.

Non-Relational Databases

1. Document Databases (e.g., MongoDB)

In document databases, multi-valued attributes can be easily represented as arrays within a document.

Steps:

Define the Document Structure: Create a collection and define the document structure to include an array for the multi-valued attribute.

// Insert a document in the Employees collection

db.employees.insertOne({

employee_name: 'John Doe',

skills: ['Java', 'Python']

});

Query Data:

// Query employees with a specific skill

db.employees.find({ skills: 'Java' });

2. Graph Databases (e.g., Neo4j)

In graph databases, multi-valued attributes can be represented as relationships between nodes.

Steps:

Create Nodes and Relationships: Create nodes for the main entity and the values of the multi-valued attribute, and then create relationships between them.

// Create an employee node

CREATE (:Employee {name: 'John Doe'});

// Create skill nodes

CREATE (:Skill {name: 'Java'});

CREATE (:Skill {name: 'Python'});

// Create relationships between the employee and skills

MATCH (e:Employee {name: 'John Doe'}), (s1:Skill {name: 'Java'}), (s2:Skill {name: 'Python'})

CREATE (e)-[:HAS_SKILL]->(s1)

CREATE (e)-[:HAS_SKILL]->(s2);

Query Data:

// Query all skills of an employee

MATCH (e:Employee {name: 'John Doe'})-[:HAS_SKILL]->(s:Skill)

RETURN s.name;

How do you represent a many-to-many relationship in database?

Here are the common ways to represent a many-to-many relationship in a database:

1. Using a Junction Table (Associative Table)

This is the most prevalent method in relational databases.

Step 1: Identify the related tables

Suppose you have two entities that have a many-to-many relationship. For example, in a school database, “Students” and “Courses”. A student can enroll in multiple courses, and a course can have multiple students.

Step 2: Create the junction table

The junction table contains at least two foreign keys, each referencing the primary key of one of the related tables.

- Table creation in SQL (for MySQL):

-- Create the Students table

CREATE TABLE Students (

student_id INT PRIMARY KEY AUTO_INCREMENT,

student_name VARCHAR(100)

);

-- Create the Courses table

CREATE TABLE Courses (

course_id INT PRIMARY KEY AUTO_INCREMENT,

course_name VARCHAR(100)

);

-- Create the junction table (Enrollments)

CREATE TABLE Enrollments (

student_id INT,

course_id INT,

PRIMARY KEY (student_id, course_id),

FOREIGN KEY (student_id) REFERENCES Students(student_id),

FOREIGN KEY (course_id) REFERENCES Courses(course_id)

);

In this example, the Enrollments table is the junction table. The combination of student_id and course_id forms a composite primary key, which ensures that each enrollment (a relationship between a student and a course) is unique.

Step 3: Insert and query data

Inserting data:

-- Insert a student

INSERT INTO Students (student_name) VALUES ('John Doe');

-- Insert a course

INSERT INTO Courses (course_name) VALUES ('Mathematics');

-- Record the enrollment

INSERT INTO Enrollments (student_id, course_id) VALUES (1, 1);

Querying data: To find all courses a student is enrolled in, or all students enrolled in a course, you can use JOIN operations.

-- Find all courses John Doe is enrolled in

SELECT Courses.course_name

FROM Students

JOIN Enrollments ON Students.student_id = Enrollments.student_id

JOIN Courses ON Enrollments.course_id = Courses.course_id

WHERE Students.student_name = 'John Doe';

2. In Non-Relational Databases

Graph Databases

- In graph databases like Neo4j, a many-to-many relationship is represented by nodes and relationships. Each entity is a node, and the relationship between them is an edge.

- For example, you can create `Student` nodes and `Course` nodes. Then, you can create an `ENROLLED_IN` relationship between the `Student` and `Course` nodes.

// Create a student node

CREATE (:Student {name: 'John Doe'});

// Create a course node

CREATE (:Course {name: 'Mathematics'});

// Create the enrollment relationship

MATCH (s:Student {name: 'John Doe'}), (c:Course {name: 'Mathematics'})

CREATE (s)-[:ENROLLED_IN]->(c);

Document Databases

- In document databases such as MongoDB, you can use arrays to represent many-to-many relationships in a denormalized way. For example, in the `students` collection, each student document can have an array of course IDs, and in the `courses` collection, each course document can have an array of student IDs. However, this approach can lead to data duplication and potential consistency issues.

// Insert a student document

db.students.insertOne({

name: 'John Doe',

courses: [ObjectId("1234567890abcdef12345678"), ObjectId("234567890abcdef123456789")]

});

// Insert a course document

db.courses.insertOne({

name: 'Mathematics',

students: [ObjectId("abcdef1234567890abcdef12"), ObjectId("bcdef1234567890abcdef123")]

});

TRANSACTION JPA

- What is “Offline Transaction”?

- How do we usually perform Transaction Management in JDBC?

- What is Database Transaction?

- What are entity states defined in Hibernate / JPA?

- How can we transfer the entity between different states?

- What are differences between save, persist?

- What are differences between update, merge and saveOrUpdate?

- How do you use Elasticsearch in your Java application?

- @Transactional - atomic operation

The `@Transactional` annotation in Spring marks a method or a class as transactional. It ensures that the operations within the method are executed atomically: either all the operations succeed and are committed to the database, or, if an error occurs, all of them are rolled back, which maintains data consistency. By default, Spring rolls back on unchecked exceptions (`RuntimeException` and `Error`) but not on checked exceptions.

- Propagation, Isolation

Transaction propagation defines how a transaction should behave when a transactional method calls another transactional method. There are several propagation types like `REQUIRED`, `REQUIRES_NEW`, `SUPPORTS`, etc. Isolation levels define the degree to which one transaction is isolated from other transactions. Common isolation levels are `READ_UNCOMMITTED`, `READ_COMMITTED`, `REPEATABLE_READ`, and `SERIALIZABLE`. Each level has different trade-offs in terms of data consistency and concurrency.

- JPA naming convention

JPA has certain naming conventions for mapping entity classes to database tables and columns. By default, it uses a naming strategy where the entity class name is mapped to the table name, and the property names are mapped to column names. However, you can also customize the naming using annotations like `@Table` and `@Column` to specify different names if needed.

- Paging and Sorting Using JPA

Spring Data JPA provides support for paging and sorting data. You can use the `Pageable` interface and related classes to specify the page number, page size, and sorting criteria. For example, you can use methods like `findAll(Pageable pageable)` in a JPA repository to retrieve a paginated and sorted list of entities.

- Hibernate Persistence Context

The Hibernate persistence context is a set of managed entities that are associated with a particular session. It tracks the state of the entities and is responsible for synchronizing the changes between the entities and the database. It manages the lifecycle of the entities, including loading, saving, and deleting them.

How does JDBC handle database connections?

JDBC (Java Database Connectivity) is a Java API that lets Java programs connect to and interact with databases. It provides a standard way to send SQL queries, retrieve data, update records, and manage database connections.

JDBC hides the details of how different databases work, so your Java code doesn’t need to change much if you switch databases. Under the hood, JDBC uses drivers (small libraries) provided by database vendors to handle the communication.

Typical steps include loading the driver (automatic since JDBC 4.0 when the driver JAR is on the classpath), opening a connection, running SQL commands, handling results, and closing the connection. In real-world apps, JDBC is the foundation for higher-level tools like Hibernate, MyBatis, and Spring Data.

- JDBC, Statement vs PreparedStatement, DataSource

- JDBC (Java Database Connectivity) is an API for interacting with databases in Java.

- `Statement` executes SQL strings directly. When the SQL is built by concatenating user input, it is vulnerable to SQL injection attacks.

- `PreparedStatement` is a more secure and usually more efficient alternative. The SQL is precompiled with `?` placeholders and the values are bound as parameters, so user input is never parsed as SQL.

- A `DataSource` is a factory for connections to a database. Implementations commonly manage a connection pool and hand pooled connections to the application.
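To make the difference between the two statement types concrete, here is a minimal sketch. The `users` table, its `name` column, and the class and method names are hypothetical; `userExists` needs a live `Connection`, so only the string-building part runs in `main`.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

final class InjectionDemo {

    // Unsafe: the user input becomes part of the SQL text itself.
    public static String buildUnsafeQuery(String name) {
        return "SELECT * FROM users WHERE name = '" + name + "'";
    }

    // Safer: the SQL text is fixed and the input is bound as a parameter.
    // The users table and name column are assumptions for illustration.
    public static boolean userExists(Connection connection, String name) throws SQLException {
        String sql = "SELECT 1 FROM users WHERE name = ?";
        try (PreparedStatement ps = connection.prepareStatement(sql)) {
            ps.setString(1, name);
            try (ResultSet rs = ps.executeQuery()) {
                return rs.next();
            }
        }
    }

    public static void main(String[] args) {
        // The quote in the input closes the string literal and appends a condition
        // that is always true, so the WHERE clause would match every row.
        String malicious = "x' OR '1'='1";
        System.out.println(buildUnsafeQuery(malicious));
        // prints: SELECT * FROM users WHERE name = 'x' OR '1'='1'
    }
}
```

With the `PreparedStatement` version the same input is compared as a literal name, so it would simply match no user.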

- Hibernate ORM, Session, Cache

Hibernate ORM is an object-relational mapping framework that maps Java objects to database tables. A `Session` in Hibernate is a lightweight, short-lived object that is not thread-safe and provides the interface for working with the database. It is used for operations such as saving, loading, and deleting objects. Hibernate also caches objects in memory to reduce database access. There are two cache levels: the first-level cache (scoped to one session and always on) and the optional second-level cache (shared across sessions through the `SessionFactory`).

- Optimistic Locking: add a version column

Optimistic locking is a concurrency control mechanism that detects conflicting updates instead of blocking readers. In Hibernate it is implemented by adding a version column to the table and mapping it with `@Version`. When an object is loaded, its version number is loaded with it. When the object is updated, Hibernate includes the loaded version in the `WHERE` clause of the `UPDATE` and increments it. If no row matches, another transaction has modified the object in the meantime, and the update fails with an optimistic lock exception (`OptimisticLockException` in JPA) rather than overwriting the other change.

- Association: many-to-many

In object-relational mapping, a many-to-many association is used when multiple objects of one entity can be related to multiple objects of another entity. For example, in a system with users and roles, a user can have multiple roles, and a role can be assigned to multiple users. In Hibernate, this is usually mapped with a join table and annotations such as `@ManyToMany` and `@JoinTable`.
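The version check described for optimistic locking above can be imitated in plain Java. This is a simulation under stated assumptions, not Hibernate code: a `ConcurrentHashMap` stands in for the table, and the names `OptimisticLockDemo` and `Row` are made up for the example. It needs Java 16 or later because it uses records.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class OptimisticLockDemo {

    // Immutable snapshot of a row: the payload plus its version number.
    record Row(String name, long version) {}

    // Stands in for a database table keyed by primary key.
    private final Map<Long, Row> table = new ConcurrentHashMap<>();

    void insert(long id, String name) {
        table.put(id, new Row(name, 0));
    }

    Row load(long id) {
        return table.get(id);
    }

    // Plays the role of
    //   UPDATE ... SET name = ?, version = version + 1 WHERE id = ? AND version = ?
    // Returns false when the row changed after it was loaded.
    boolean update(long id, Row loaded, String newName) {
        Row next = new Row(newName, loaded.version() + 1);
        // replace() is atomic and succeeds only if the stored row still equals
        // the loaded snapshot. Every update bumps the version, so an equal
        // snapshot implies an equal version.
        return table.replace(id, loaded, next);
    }

    public static void main(String[] args) {
        OptimisticLockDemo db = new OptimisticLockDemo();
        db.insert(1L, "Alice");

        // Two "transactions" read the same row at version 0.
        Row readByTx1 = db.load(1L);
        Row readByTx2 = db.load(1L);

        System.out.println(db.update(1L, readByTx1, "Alice A.")); // true: first writer wins
        System.out.println(db.update(1L, readByTx2, "Alice B.")); // false: stale version
        System.out.println(db.load(1L).version());                // 1
    }
}
```

In a real application the losing transaction would typically reload the entity and retry, or report the conflict to the user.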

1. What is “Offline Transaction”?

An offline transaction is a set of data operations performed without an immediate, real-time connection to the database server. The operations run against a local copy of the data, and the changes are synchronized with the main database later.

Examples:

- Mobile Banking App: A user opens a mobile banking app on their smartphone while on an airplane (no internet connection). They can view their account balance and transaction history, both of which are stored locally. They can also initiate a new fund transfer. The app records this transfer request in a local database on the phone. Once the plane lands and the phone connects to the internet, the app synchronizes with the bank’s central database, uploading the new transfer request and downloading any new account updates.

- Field Salesperson: A salesperson visits clients in an area with poor network coverage. Using a tablet, they access a local copy of the customer database. They add new customer details and record sales orders. Later, when they get back to an area with a network, the tablet syncs the new data with the company’s central database.
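The record-locally-then-sync pattern in both examples can be sketched as a queue of pending changes that is replayed once a connection is available. Everything here is hypothetical (`OfflineQueueDemo`, `PendingTransfer`, `CentralStore`). A real implementation would also need durable local storage, conflict resolution, and idempotent replays, which this sketch leaves out.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

final class OfflineQueueDemo {

    // A change recorded locally while the device is offline (hypothetical shape).
    record PendingTransfer(String fromAccount, String toAccount, long amountCents) {}

    // Stands in for the central database; a real app would call a remote API.
    interface CentralStore {
        void apply(PendingTransfer transfer);
    }

    private final Deque<PendingTransfer> localQueue = new ArrayDeque<>();

    // Called while offline: only the local queue is touched.
    void recordOffline(PendingTransfer transfer) {
        localQueue.addLast(transfer);
    }

    int pendingCount() {
        return localQueue.size();
    }

    // Called once connectivity returns: replay the changes in the order they were made.
    int synchronize(CentralStore central) {
        int synced = 0;
        while (!localQueue.isEmpty()) {
            // If apply() throws, the entry stays queued for the next attempt.
            central.apply(localQueue.peekFirst());
            localQueue.removeFirst();
            synced++;
        }
        return synced;
    }

    public static void main(String[] args) {
        OfflineQueueDemo app = new OfflineQueueDemo();
        app.recordOffline(new PendingTransfer("ACC-1", "ACC-2", 5_000));
        app.recordOffline(new PendingTransfer("ACC-1", "ACC-3", 1_250));

        List<PendingTransfer> central = new ArrayList<>();
        int synced = app.synchronize(central::add);
        System.out.println(synced + " synced, " + app.pendingCount() + " pending");
        // prints: 2 synced, 0 pending
    }
}
```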

2. How do we usually perform Transaction Management in JDBC?

In JDBC (Java Database Connectivity), transaction management involves the following steps:

Step 1: Disable Auto-Commit Mode

By default, JDBC operates in auto-commit mode, where each SQL statement is treated as a separate transaction. To group multiple statements into a single transaction, we need to disable auto-commit. The statements are then executed, followed by `commit()` on success or `rollback()` on failure.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;

public class JDBCTransactionExample {

    public static void main(String[] args) {
        Connection connection = null;
        try {
            // Establish a connection
            connection = DriverManager.getConnection(
                    "jdbc:mysql://localhost:3306/mydb", "user", "password");

            // Disable auto-commit so the statements below form one transaction
            connection.setAutoCommit(false);

            // try-with-resources closes the Statement even if an update fails
            try (Statement statement = connection.createStatement()) {
                // Execute SQL statements
                statement.executeUpdate("INSERT INTO employees (name, salary) VALUES ('John', 5000)");
                statement.executeUpdate("UPDATE departments SET budget = budget - 5000 WHERE dept_name = 'IT'");
            }

            // Commit the transaction
            connection.commit();
        } catch (SQLException e) {
            try {
                if (connection != null) {
                    // Roll back the transaction in case of an error
                    connection.rollback();
                }
            } catch (SQLException ex) {
                ex.printStackTrace();
            }
            e.printStackTrace();
        } finally {
            try {
                if (connection != null) {
                    connection.close();
                }
            } catch (SQLException e) {
                e.printStackTrace();
            }
        }
    }
}
```

Explanation:

- `connection.setAutoCommit(false)`: Disables auto-commit mode so that the statements are grouped into a single transaction.

- `connection.commit()`: Commits all the statements in the transaction if everything goes well.

- `connection.rollback()`: Rolls back all the statements in the transaction if an error occurs.
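The commit-or-rollback flow above tends to be repeated around every unit of work, so it is often factored into a helper. The sketch below is one possible shape, not a standard API: `JdbcTx`, `TxWork`, and `inTransaction` are names invented for this example, and only `java.sql.Connection` methods that exist in the JDK are called.

```java
import java.sql.Connection;
import java.sql.SQLException;

final class JdbcTx {

    // Work to run inside one transaction; it may throw SQLException.
    @FunctionalInterface
    interface TxWork {
        void run(Connection connection) throws SQLException;
    }

    // Runs the work with auto-commit off, commits on success, rolls back on
    // failure, and restores the previous auto-commit setting either way.
    public static void inTransaction(Connection connection, TxWork work) throws SQLException {
        boolean previousAutoCommit = connection.getAutoCommit();
        connection.setAutoCommit(false);
        try {
            work.run(connection);
            connection.commit();
        } catch (SQLException | RuntimeException e) {
            try {
                connection.rollback();
            } catch (SQLException rollbackFailure) {
                // Keep the original failure as the primary exception.
                e.addSuppressed(rollbackFailure);
            }
            throw e;
        } finally {
            connection.setAutoCommit(previousAutoCommit);
        }
    }
}
```

A caller would pass the statements as a lambda, for example `JdbcTx.inTransaction(connection, c -> { /* executeUpdate calls */ })`, and still owns opening and closing the connection.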

3. What is Database Transaction?

A database transaction is a sequence of one or more SQL statements that are treated as a single unit of work. It must satisfy the ACID properties:

- Atomicity: Either all the statements in the transaction are executed successfully, or none of them are. For example, in a bank transfer, if you transfer money from one account to another, either both the debit from the source account and the credit to the destination account happen, or neither does.

- Consistency: The transaction takes the database from one consistent state to another, so every constraint and rule still holds after it completes. For instance, a transfer between two accounts must leave their combined balance unchanged, and a transaction that would violate this is rejected or rolled back.

- Isolation: Transactions are isolated from each other. One transaction should not be affected by the intermediate states of other concurrent transactions. For example, if two users are trying to transfer money at the same time, their transactions should not interfere with each other.

- Durability: Once a transaction is committed, its changes are permanent and will survive any subsequent system failures.

4. What are entity states defined in Hibernate / JPA?

In Hibernate and JPA (Java Persistence API), entities can be in one of the following states:

Transient: An entity is transient when it has been created with the `new` keyword and has not been associated with a persistence context. It has no corresponding row in the database.

```java
// Transient entity: not known to any persistence context
Employee employee = new Employee();
employee.setName("Jane");
```

Persistent: A persistent (managed) entity is associated with a persistence context and corresponds to a row in the database, or will after the next flush if it was just persisted. Any changes made to a persistent entity are automatically synchronized with the database when the transaction is committed.

```java
// entityManagerFactory is assumed to be configured elsewhere
EntityManager entityManager = entityManagerFactory.createEntityManager();
entityManager.getTransaction().begin();
Employee employee = entityManager.find(Employee.class, 1L);
// Now the employee is in persistent state
```

Detached: A detached entity was once persistent but is no longer associated with a persistence context. It still has a corresponding row in the database, but changes made to it are not automatically synchronized.

```java
entityManager.getTransaction().commit();
entityManager.close();
// Now the employee is in detached state
```

Removed: An entity is in the removed state when it has been marked for deletion from the database. Once the transaction is committed, the corresponding row in the database is deleted.

```java
entityManager.getTransaction().begin();
Employee employee = entityManager.find(Employee.class, 1L);
entityManager.remove(employee);
// Now the employee is in removed state
```

5. How can we transfer the entity between different states?

Transient to Persistent: Use `persist()` or `save()` in Hibernate (`save()` is deprecated since Hibernate 6). In JPA, use `EntityManager.persist()`.

```java
EntityManager entityManager = entityManagerFactory.createEntityManager();
entityManager.getTransaction().begin();
Employee employee = new Employee();
employee.setName("Tom");
entityManager.persist(employee);
// Now the employee is in persistent state
```

Persistent to Detached: Closing the `EntityManager`, clearing the persistence context with `clear()`, or calling `detach()` on the entity makes a persistent entity detached.

```java
entityManager.getTransaction().commit();
entityManager.close();
// The previously persistent entity is now detached
```

Detached to Persistent: Use the `merge()` method in JPA. It copies the detached state onto a managed instance and returns that instance; the object passed in stays detached.

```java
EntityManager newEntityManager = entityManagerFactory.createEntityManager();
newEntityManager.getTransaction().begin();
// getDetachedEmployee() is a placeholder for however the detached entity is obtained
Employee detachedEmployee = getDetachedEmployee();
Employee persistentEmployee = newEntityManager.merge(detachedEmployee);
// persistentEmployee is in persistent state; detachedEmployee is still detached
```

Persistent to Removed: Use the `remove()` method in JPA. `EntityManager.remove()` rejects a detached entity with an `IllegalArgumentException`, so a detached entity must first be made persistent again with `merge()` or reloaded with `find()`.

```java
entityManager.getTransaction().begin();
Employee employee = entityManager.find(Employee.class, 1L);
entityManager.remove(employee);
// Now the employee is in removed state
```
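As a memory aid, the transitions above can be written as a small lookup table. This is a toy model, not Hibernate or JPA code. The enum and class names are invented, `merge()` is simplified to a state change even though it really returns a separate managed copy, and `remove()` on a detached entity is treated as invalid, which matches `EntityManager.remove()` in JPA.

```java
import java.util.EnumMap;
import java.util.Map;

final class EntityLifecycle {

    enum State { TRANSIENT, PERSISTENT, DETACHED, REMOVED }

    enum Operation { PERSIST, DETACH, MERGE, REMOVE }

    // Allowed transitions. Combinations that are a no-op or an error for a
    // given state are simply absent from the table.
    private static final Map<State, Map<Operation, State>> TRANSITIONS = new EnumMap<>(State.class);

    static {
        TRANSITIONS.put(State.TRANSIENT, Map.of(Operation.PERSIST, State.PERSISTENT));
        TRANSITIONS.put(State.PERSISTENT, Map.of(
                Operation.DETACH, State.DETACHED,
                Operation.REMOVE, State.REMOVED));
        TRANSITIONS.put(State.DETACHED, Map.of(Operation.MERGE, State.PERSISTENT));
    }

    // Returns the state after the operation, or throws if the table has no entry.
    public static State apply(State current, Operation operation) {
        Map<Operation, State> allowed = TRANSITIONS.getOrDefault(current, Map.of());
        State next = allowed.get(operation);
        if (next == null) {
            throw new IllegalStateException(operation + " is not modeled for " + current);
        }
        return next;
    }

    public static void main(String[] args) {
        State state = State.TRANSIENT;
        state = apply(state, Operation.PERSIST); // PERSISTENT
        state = apply(state, Operation.DETACH);  // DETACHED
        state = apply(state, Operation.MERGE);   // PERSISTENT
        state = apply(state, Operation.REMOVE);  // REMOVED
        System.out.println(state);               // prints: REMOVED
    }
}
```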

6. What are differences between save, persist?

`save()` (Hibernate-specific):

- Returns the generated identifier immediately, which can force an early `INSERT` for identity-style generators. It is used to insert a new entity into the database. Called on a detached instance, it can insert a duplicate row instead of failing. It is deprecated since Hibernate 6 in favor of `persist()`.

```java
Session session = sessionFactory.openSession();
Transaction transaction = session.beginTransaction();
Employee employee = new Employee();
employee.setName("Alice");
// save() hands back the generated identifier right away
Serializable id = session.save(employee);
transaction.commit();
session.close();
```

`persist()` (JPA standard):

- Returns `void` and does not guarantee that the identifier is assigned immediately; the `INSERT` may be deferred until flush. It is used to make a transient entity persistent. If the entity is already persistent, the call has no effect; if it is detached, an exception is thrown.

EntityManager entityManager = entityManagerFactory.createEntityManager();

entityManager.getTransaction().begin();

Employee employee = new Employee();

employee.setName("Bob");