原文

原文标题 : Source Multiplayer Networking

Multiplayer games based on the Source Engine use a Client-Server networking architecture. Usually a server is a dedicated host that runs the game and is authoritative about world simulation, game rules, and player input processing. A client is a player’s computer connected to a game server. The client and server communicate with each other by sending small data packets at a high frequency (usually 20 to 30 packets per second). A client receives the current world state from the server and generates video and audio output based on these updates. The client also samples data from input devices (keyboard, mouse, microphone, etc.) and sends these input samples back to the server for further processing. Clients only communicate with the game server and not between each other (like in a peer-to-peer application). In contrast with a single player game, a multiplayer game has to deal with a variety of new problems caused by packet-based communication.

Network bandwidth is limited, so the server can’t send a new update packet to all clients for every single world change. Instead, the server takes snapshots of the current world state at a constant rate and broadcasts these snapshots to the clients. Network packets take a certain amount of time to travel between the client and the server (i.e. half the ping time). This means that the client time is always a little bit behind the server time. Furthermore, client input packets are also delayed on their way back, so the server is processing temporally delayed user commands. In addition, each client has a different network delay which varies over time due to other background traffic and the client’s framerate. These time differences between server and client causes logical problems, becoming worse with increasing network latencies. In fast-paced action games, even a delay of a few milliseconds can cause a laggy gameplay feeling and make it hard to hit other players or interact with moving objects. Besides bandwidth limitations and network latencies, information can get lost due to network packet loss.

To cope with the issues introduced by network communication, the Source engine server employs techniques such as data compression and lag compensation which are invisible to the client. The client then performs prediction and interpolation to further improve the experience.

Basic networking

The server simulates the game in discrete time steps called ticks. By default, the timestep is 15ms, so 66.666… ticks per second are simulated, but mods can specify their own tickrate. During each tick, the server processes incoming user commands, runs a physical simulation step, checks the game rules, and updates all object states. After simulating a tick, the server decides if any client needs a world update and takes a snapshot of the current world state if necessary. A higher tickrate increases the simulation precision, but also requires more CPU power and available bandwidth on both server and client. The server admin may override the default tickrate with the -tickrate command line parameter, though tickrate changes done this way are not recommended because the mod may not work as designed if its tickrate is changed.

-tickrate command line parameter is not available on CSS, DoD S, TF2, L4D and L4D2 because changing tickrate causes server timing issues. The tickrate is set to 66 in CSS, DoD S and TF2, and 30 in L4D and L4D2.Clients usually have only a limited amount of available bandwidth. In the worst case, players with a modem connection can’t receive more than 5 to 7 KB/sec. If the server tried to send them updates with a higher data rate, packet loss would be unavoidable. Therefore, the client has to tell the server its incoming bandwidth capacity by setting the console variable rate (in bytes/second). This is the most important network variable for clients and it has to be set correctly for an optimal gameplay experience. The client can request a certain snapshot rate by changing cl_updaterate (default 20), but the server will never send more updates than simulated ticks or exceed the requested client rate limit. Server admins can limit data rate values requested by clients with sv_minrate and sv_maxrate (both in bytes/second). Also the snapshot rate can be restricted with sv_minupdaterate and sv_maxupdaterate (both in snapshots/second).

The client creates user commands from sampling input devices with the same tick rate that the server is running with. A user command is basically a snapshot of the current keyboard and mouse state. But instead of sending a new packet to the server for each user command, the client sends command packets at a certain rate of packets per second (usually 30). This means two or more user commands are transmitted within the same packet. Clients can increase the command rate with cl_cmdrate. This will increase responsiveness but requires more outgoing bandwidth, too.

Game data is compressed using delta compression to reduce network load. That means the server doesn’t send a full world snapshot each time, but rather only changes (a delta snapshot) that happened since the last acknowledged update. With each packet sent between the client and server, acknowledge numbers are attached to keep track of their data flow. Usually full (non-delta) snapshots are only sent when a game starts or a client suffers from heavy packet loss for a couple of seconds. Clients can request a full snapshot manually with the cl_fullupdate command.

Responsiveness, or the time between user input and its visible feedback in the game world, are determined by lots of factors, including the server/client CPU load, simulation tickrate, data rate and snapshot update settings, but mostly by the network packet traveling time. The time between the client sending a user command, the server responding to it, and the client receiving the server’s response is called the latency or ping (or round trip time). Low latency is a significant advantage when playing a multiplayer online game. Techniques like prediction and lag compensation try to minimize that advantage and allow a fair game for players with slower connections. Tweaking networking setting can help to gain a better experience if the necessary bandwidth and CPU power is available. We recommend keeping the default settings, since improper changes may cause more negative side effects than actual benefits.

Servers that Support Tickrate

The tickrate can be altered by using the -tickrate parameter

- Counter Strike: Global Offensive

- Half-Life 2: Deathmatch

The following servers tickrate cannot be altered as changing this causes server timing issues.

Tickrate 66

- Counter Strike: Source

- Day of Defeat: Source

- Team Fortress 2

Tickrate 30

- Left 4 Dead

- Left 4 Dead 2

Entity interpolation

By default, the client receives about 20 snapshot per second. If the objects (entities) in the world were only rendered at the positions received by the server, moving objects and animation would look choppy and jittery. Dropped packets would also cause noticeable glitches. The trick to solve this problem is to go back in time for rendering, so positions and animations can be continuously interpolated between two recently received snapshots. With 20 snapshots per second, a new update arrives about every 50 milliseconds. If the client render time is shifted back by 50 milliseconds, entities can be always interpolated between the last received snapshot and the snapshot before that.

Source defaults to an interpolation period (‘lerp’) of 100-milliseconds (cl_interp 0.1); this way, even if one snapshot is lost, there are always two valid snapshots to interpolate between. Take a look at the following figure showing the arrival times of incoming world snapshots:

The last snapshot received on the client was at tick 344 or 10.30 seconds. The client time continues to increase based on this snapshot and the client frame rate. If a new video frame is rendered, the rendering time is the current client time 10.32 minus the view interpolation delay of 0.1 seconds. This would be 10.22 in our example and all entities and their animations are interpolated using the correct fraction between snapshot 340 and 342.

Since we have an interpolation delay of 100 milliseconds, the interpolation would even work if snapshot 342 were missing due to packet loss. Then the interpolation could use snapshots 340 and 344. If more than one snapshot in a row is dropped, interpolation can’t work perfectly because it runs out of snapshots in the history buffer. In that case the renderer uses extrapolation (cl_extrapolate 1) and tries a simple linear extrapolation of entities based on their known history so far. The extrapolation is done only for 0.25 seconds of packet loss (cl_extrapolate_amount), since the prediction errors would become too big after that.

Entity interpolation causes a constant view “lag” of 100 milliseconds by default (cl_interp 0.1), even if you’re playing on a listenserver (server and client on the same machine). This doesn’t mean you have to lead your aiming when shooting at other players since the server-side lag compensation knows about client entity interpolation and corrects this error.

cl_interp_ratio cvar. With this you can easily and safely decrease the interpolation period by setting cl_interp to 0, then increasing the value of cl_updaterate (the useful limit of which depends on server tickrate). You can check your final lerp with net_graph 1.sv_showhitboxes (not available in Source 2009) you will see player hitboxes drawn in server time, meaning they are ahead of the rendered player model by the lerp period. This is perfectly normal!Input prediction

Lets assume a player has a network latency of 150 milliseconds and starts to move forward. The information that the +FORWARD key is pressed is stored in a user command and send to the server. There the user command is processed by the movement code and the player’s character is moved forward in the game world. This world state change is transmitted to all clients with the next snapshot update. So the player would see his own change of movement with a 150 milliseconds delay after he started walking. This delay applies to all players actions like movement, shooting weapons, etc. and becomes worse with higher latencies.

A delay between player input and corresponding visual feedback creates a strange, unnatural feeling and makes it hard to move or aim precisely. Client-side input prediction (cl_predict 1) is a way to remove this delay and let the player’s actions feel more instant. Instead of waiting for the server to update your own position, the local client just predicts the results of its own user commands. Therefore, the client runs exactly the same code and rules the server will use to process the user commands. After the prediction is finished, the local player will move instantly to the new location while the server still sees him at the old place.

After 150 milliseconds, the client will receive the server snapshot that contains the changes based on the user command he predicted earlier. Then the client compares the server position with his predicted position. If they are different, a prediction error has occurred. This indicates that the client didn’t have the correct information about other entities and the environment when it processed the user command. Then the client has to correct its own position, since the server has final authority over client-side prediction. If cl_showerror 1 is turned on, clients can see when prediction errors happen. Prediction error correction can be quite noticeable and may cause the client’s view to jump erratically. By gradually correcting this error over a short amount of time (cl_smoothtime), errors can be smoothly corrected. Prediction error smoothing can be turned off with cl_smooth 0.

Prediction is only possible for the local player and entities affected only by him, since prediction works by using the client’s keypresses to make a “best guess” of where the player will end up. Predicting other players would require literally predicting the future with no data, since there’s no way to instantaneously get keypresses from them.

Lag compensation

- All source code for lag compensation and view interpolation is available in the Source SDK. See

for implementation details.

Let’s say a player shoots at a target at client time 10.5. The firing information is packed into a user command and sent to the server. While the packet is on its way through the network, the server continues to simulate the world, and the target might have moved to a different position. The user command arrives at server time 10.6 and the server wouldn’t detect the hit, even though the player has aimed exactly at the target. This error is corrected by the server-side lag compensation.

The lag compensation system keeps a history of all recent player positions for one second. If a user command is executed, the server estimates at what time the command was created as follows:

Command Execution Time = Current Server Time - Packet Latency - Client View Interpolation

Then the server moves all other players - only players - back to where they were at the command execution time. The user command is executed and the hit is detected correctly. After the user command has been processed, the players revert to their original positions.

On a listen server you can enable sv_showimpacts 1 to see the different server and client hitboxes:

This screenshot was taken on a listen server with 200 milliseconds of lag (using net_fakelag), right after the server confirmed the hit. The red hitbox shows the target position on the client where it was 100ms + interp period ago. Since then, the target continued to move to the left while the user command was travelling to the server. After the user command arrived, the server restored the target position (blue hitbox) based on the estimated command execution time. The server traces the shot and confirms the hit (the client sees blood effects).

Client and server hitboxes don’t exactly match because of small precision errors in time measurement. Even a small difference of a few milliseconds can cause an error of several inches for fast-moving objects. Multiplayer hit detection is not pixel perfect and has known precision limitations based on the tickrate and the speed of moving objects.

The question arises, why is hit detection so complicated on the server? Doing the back tracking of player positions and dealing with precision errors while hit detection could be done client-side way easier and with pixel precision. The client would just tell the server with a “hit” message what player has been hit and where. We can’t allow that simply because a game server can’t trust the clients on such important decisions. Even if the client is “clean” and protected by Valve Anti-Cheat, the packets could be still modified on a 3rd machine while routed to the game server. These “cheat proxies” could inject “hit” messages into the network packet without being detected by VAC (a “man-in-the-middle” attack).

Network latencies and lag compensation can create paradoxes that seem illogical compared to the real world. For example, you can be hit by an attacker you can’t even see anymore because you already took cover. What happened is that the server moved your player hitboxes back in time, where you were still exposed to your attacker. This inconsistency problem can’t be solved in general because of the relatively slow packet speeds. In the real world, you don’t notice this problem because light (the packets) travels so fast and you and everybody around you sees the same world as it is right now.

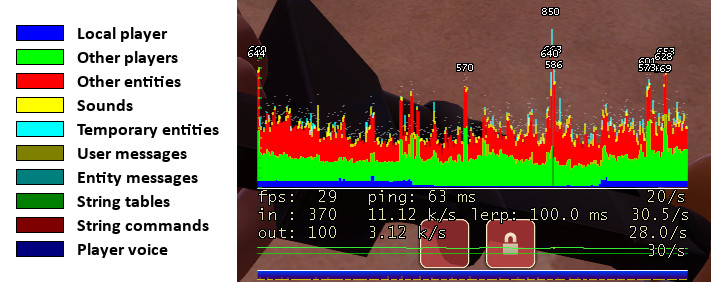

Net graph

The Source engine offers a couple of tools to check your client connection speed and quality. The most popular one is the net graph, which can be enabled with net_graph 2 (or +graph). Incoming packets are represented by small lines moving from right to left. The height of each line reflects size of a packet. If a gap appears between lines, a packet was lost or arrived out of order. The lines are color-coded depending on what kind of data they contain.

Under the net graph, the first line shows your current rendered frames per second, your average latency, and the current value of cl_updaterate. The second line shows the size in bytes of the last incoming packet (snapshots), the average incoming bandwidth, and received packets per second. The third line shows the same data just for outgoing packets (user commands).

Optimizations

The default networking settings are designed for playing on dedicated server on the Internet. The settings are balanced to work well for most client/server hardware and network configurations. For Internet games the only console variable that should be adjusted on the client is “rate”, which defines your available bytes/second bandwidth of your network connection. Good values for “rate” is 4500 for modems, 6000 for ISDN, 10000 DSL and above.

In an high-performance network environment, where the server and all clients have the necessary hardware resources available, it’s possible to tweak bandwidth and tickrate settings to gain more gameplay precision. Increasing the server tickrate generally improves movement and shooting precision but comes with a higher CPU cost. A Source server running with tickrate 100 generates about 1.5x more CPU load than a default tickrate 66. That can cause serious calculation lags, especially when lots of people are shooting at the same time. It’s not suggested to run a game server with a higher tickrate than 66 to reserve necessary CPU resources for critical situations.

If the game server is running with a higher tickrate, clients can increase their snapshot update rate (cl_updaterate) and user command rate (cl_cmdrate), if the necessary bandwidth (rate) is available. The snapshot update rate is limited by the server tickrate, a server can’t send more then one update per tick. So for a tickrate 66 server, the highest client value for cl_updaterate would be 66. If you increase the snapshot rate and encounter packet loss or choke, you have to turn it down again. With an increased cl_updaterate you can also lower the view interpolation delay (cl_interp). The default interpolation delay is 0.1 seconds, which derives from the default cl_updaterate 20. View interpolation delay gives a moving player a small advantage over a stationary player since the moving player can see his target a split second earlier. This effect is unavoidable, but it can be reduced by decreasing the view interpolation delay. If both players are moving, the view lag delay is affecting both players and nobody has an advantage.

This is the relation between snapshot rate and view interpolation delay is the following:

interpolation period = max( cl_interp, cl_interp_ratio / cl_updaterate )

“Max(x,y)” means “whichever of these is higher”. You can set cl_interp to 0 and still have a safe amount of interp. You can then increase cl_updaterate to decrease your interp period further, but don’t exceed tickrate (66) or flood your connection with more data than it can handle.

Tips

- Don’t change console settings unless you are 100% sure what you are doing

- Don’t turn off view interpolation and/or lag compensation

- Optimized setting for one client may not work for other clients

- If you follow a player in “First-Person” as a spectator in a game or SourceTV, you don’t exactly see what the player sees

Most "high-performance" setting cause exactly the opposite effect, if the server or network can't handle the load.It will not improve movement or shooting precision.Do not just use settings from other clients without verifing them for your system.Spectators see the game world without lag compensation.译文

译文出处

Source引擎的多人游戏使用基于UDP通信的C/S架构。游戏以服务器逻辑作为世界权威,客户端和服务器通过UDP协议(20~30packet/s)通信。客户端从服务器接收信息并基于当前世界状态渲染画面和输出音频。客户端以固定频率发送操作输入到服务器。客户端仅与游戏服务器,而不是彼此之间通信。多人游戏必须处理基于网络消息同步所带来的一系列问题。

网络的带宽是有限的,所以服务器不能为每一个世界的变化发送新的更新数据包发送到所有客户端。相反,服务器以固定的频率取当前世界状态的快照并广播这些快照到客户端。网络数据包需要一定的时间量的客户端和服务器(RTT的一半)来往。这意味着客户端时间相对服务器时间总是稍有滞后。此外,客户端输入数据包同步到服务器也有一定网络传输时间,所以服务器处理客户端输入也存在延迟的。不同的客户端因为网络带宽和通信线路不同也会存在不同的网络延时。随着服务器和客户端之间的这些网络延迟增大, 网络延迟可能会导致逻辑问题。比如在快节奏的动作游戏中,在几毫秒的延迟甚至就会导致游戏卡顿的感觉,玩家会觉得很难打到对方玩家或运动的物体。此外除了带宽限制和网络延迟还要考虑网络传输中会有消息丢失的情况。

为了解决网络通信引入的一系列问题,Source引擎在服务器同步时采用了数据压缩和延迟补偿的逻辑,客户端采用了预测运行和插值平滑处理等技术来获得更好的游戏体验。

基本网络模型

服务器以一个固定的时间间隔更新模拟游戏世界。默认情况下,时间步长为15ms,以66.66次每秒的频率更新模拟游戏世界,但不同游戏可以指定更新频率。在每个更新周期内服务器处理传入的用户命令,运行物理模拟步,检查游戏规则,并更新所有的对象状态。每一次模拟更新tick之后服务器会决定是否更新当前时间快照以及每个客户端当前是否需更新。较高的tickrate增加了模拟精度,需要服务器和客户端都有更多可用的CPU和带宽资源。客户通常只能提供有限的带宽。在最坏的情况下,玩家的调制解调器连接不能获得超过5-7KB /秒的流量。如果服务器的数据更新发送频率超过了客户端的带宽处理限制,丢包是不可避免的。因此客户端可以通过在控制台设置接受带宽限制,以告诉服务器其收到的带宽容量。这是客户最重要的网络参数,想要获得最佳的游戏体验的话必须正确的设置此参数。客户端可以通过设置cl_updaterate(默认20)来改变获得快照平的频率,但服务器永远不会发送比tickerate更多的更新或超过请求的客户端带宽限制。服务器管理员可以通过sv_minrate和sv_maxrate(byte/s)限制客户端的上行请求频率。当然快照更新同步频率都受到sv_minupdaterate和sv_maxupdaterate(快照/秒)的限制。

客户端使用与服务端tickrate一样的频率采样操作输入创建用户命令。用户命令基本上是当前的键盘和鼠标状态的快照。客户端不会把每个用户命令都立即发送到服务器而是以每秒(通常是30)的速率发送命令包。这意味着两个或更多个用户的命令在同一包内传输。客户可以增加与的cl_cmdrate命令速率。这可以提高响应速度,但需要更多的出口带宽。

游戏数据使用增量更新压缩来减少网络传输。服务器不会每次都发送一个完整的世界快照,而只会更新自上次确认更新(通过ACK确认)之后所发生的变化(增量快照)。客户端和服务器之间发送的每个包都会带有ACK序列号来跟踪网络数据流。当游戏开始时或客户端在发生非常严重的数据包丢失时, 客户可以要求全额快照同步。

用户操作的响应速度(操作到游戏世界中的可视反馈之间的时间)是由很多因素决定的,包括服务器/客户端的CPU负载,更新频率,网络速率和快照更新设置,但主要是由网络包的传输时间确定。从客户端发送命令到服务器响应, 再到客户端接收此命令对应的服务器响应被称为延迟或ping(或RTT)。低延迟在玩多人在线游戏时有显著的优势。客户端本地预测和服务器的延迟补偿技术可以尽量为网络较差的游戏玩家提供相对公平的体验。如果有良好的带宽和CPU可用,可以通过调整网络设置以获得更好的体验, 反之我们建议保持默认设置,因为不正确的更改可能导致负面影响大于实际效益。

Enitiy插值平滑

通常情况下客户端接收每秒约20个快照更新。如果世界中的对象(实体)直接由服务器同步的位置呈现,物体移动和动画会看起来很诡异。网络通信的丢包也将导致明显的毛刺。解决这个问题的关键是要延迟渲染,玩家位置和动画可以在两个最近收到快照之间的连续插值。以每秒20快照为例,一个新的快照更新到达时大约每50毫秒。如果客户端渲染延迟50毫秒,客户端收到一个快照,并在此之前的快照之间内插(Source默认为100毫秒的插补周期);这样一来,即使一个快照丢失,总是可以在两个有效快照之间进行平滑插值。如下图显示传入世界快照的到达时间:

在客户端接收到的最后一个快照是在tick 344或10.30秒。客户的时间将继续在此快照的基础上基于客户端的帧率增加。下一个视图帧渲染时间是当前客户端的时间10.32减去0.1秒的画面插值延迟10.20。在我们的例子下一个渲染帧的时间是10.22和所有实体及其动画都可以基于快照340和342做正确的插值处理。

既然我们有一个100毫秒的延迟插值,如果快照342由于丢包缺失,插值可以使用快照340和344来进行平滑处理。如果连续多个快照丢失,插值处理可能表现不会很好,因为插值是基于缓冲区的历史快照进行的。在这种情况下,渲染器会使用外推法(cl_extrapolate 1),并尝试基于其已知的历史,为实体做一个基于目前为止的一个简单线性外推。外推只会快照更新包连续丢失(cl_extrapolate_amount)0.25秒才会触发,因为该预测之后误差将变得太大。实体内会插导致100毫秒默认(cl_interp 0.1)的恒定视图“滞后”,就算你在listenserver(服务器和客户端在同一台机器上)上玩游戏。这并不是说你必须提前预判动画去瞄准射击,因为服务器端的滞后补偿知道客户端实体插值并纠正这个误差。

最近Source引擎的游戏有cl_interp_ratioCVaR的。有了这个,你可以轻松,安全地通过设置cl_interp为0,那么增加的cl_updaterate的值(这同时也会受限于服务器tickrate)来减少插补周期。你可以用net_graph 1检查您的最终线性插值。

如果打开sv_showhitboxes,你会看到在服务器时间绘制的玩家包围盒,这意味着他们在前进的线性插值时期所呈现的播放器模式。

输入预测

让我们假设一个玩家有150毫秒的网络延迟,并开始前进。前进键被按下的信息被存储在用户命令,并发送至服务器。用户命令是由移动代码逻辑处理,玩家的角色将在游戏世界中向前行走。这个世界状态的变化传送到所有客户端的下一个快照的更新。因此玩家看到自己开始行动的响应会有150毫秒延迟,这种延迟对于高频动作游戏(体育,设计类游戏)会有明显的延迟感。玩家输入和相应的视觉反馈之间的延迟会产生一种奇怪的,不自然的感觉,使得玩家很难移动或精确瞄准。客户端的输入预测(cl_predict 1)执行是一种消除这种延迟的方法,让玩家的行动感到更即时。与其等待服务器来更新自己的位置,在本地客户端只是预测自己的用户命令的结果。因此,客户端准确运行相同的代码和规则服务器将使用来处理用户命令。预测完成后,当地的玩家会移动到新位置,而服务器仍然可以看到他在老地方。150毫秒后,客户会收到包含基于他早期预测用户命令更改服务器的快照。客户端会将预测位置同服务器的位置对比。如果它们是不同的,则发生了预测误差。这表明,在客户端没有关于其他实体的正确信息和环境时,它处理用户命令。然后,客户端必须纠正自己的位置,因为服务器拥有客户端预测最终决定权。如果cl_showerror 1开启,客户端可以看到,当预测误差发生。预测误差校正可以是相当明显的,并且可能导致客户端的视图不规则跳动。通过在一定时间(cl_smoothtime)逐渐纠正这个错误,错误可以顺利解决。预测误差平滑处理可以通过设置cl_smooth 0来关闭。预测只对本地玩家以及那些只收它影响的实体有效,因为预测的工作原理是使用客户端的操作来预测的。对于其他玩家没法做有效预测, 因为没有办法立即从他们身上得到操作信息。

延迟补偿

比方说,一个玩家在10.5s的时刻射击了一个目标。射击信息被打包到用户命令,该命令通过网络的方式发送至服务器。服务器持续模拟游戏世界,目标可能已经移动到一个不同的位置。用户命令到达服务器时间10.6时服务器就无法检测到射击命中,即使玩家已经在目标准确瞄准。这个错误需要由服务器侧进行延迟补偿校正。延迟补偿系统使所有玩家最近位置的历史一秒。如果在执行用户的命令,服务器预计在命令创建什么时间如下:

命令执行时间=当前服务器时间 - 数据包延迟 - 客户端查看插值

然后服务器会将所有其他玩家回溯到命令执行时的位置,他们在命令执行时间。用户指令被执行,并正确地检测命中。用户命令处理完成后,玩家将会恢复到原来的位置。由于实体插值包含在公式中,可能会导致意外的结果。服务器端可以启用sv_showimpacts 1,显示服务器和客户端射击包围盒位置差异:

该画面在主机上设置延迟200毫秒(net_fakelag设置)时获取的,射击真实命中玩家。红色命中包围盒显示了客户端那里是100毫秒+插补周期前的目标位置。此后,目标继续向左移动,而用户命令被行进到服务器。用户命令到达后,服务器恢复基于所述估计的命令执行时间目标位置(蓝色击中盒)。服务器回溯演绎,并确认命中(客户端看到流血效果)。

因为在时间测量精度的误差客户端和服务器命中包围盒不完全匹配。对于快速移动的物体甚至几毫秒的误差也会导致几英寸的误差。多人游戏击中检测不是基于像素的完美匹配,此外基于tickrate模拟的运动物体的速度也有精度的限制。

既然击中检测服务器上的逻辑如此复杂为什么不把命中检查放在客户端呢?如果在客户端进行命中检查, 玩家位置和像素命中处理检测都可以精准的进行。客户端将只告诉服务器用“打”的消息一直打到什么样的玩家。因为游戏服务器不能信任客户端这种重要决定。因为即使客户端是“干净”的,并通过了Valve反作弊保护,但是报文可以被截获修改然后发送到游戏服务器。这些“作弊代理”可以注入“打”的消息到网络数据包而不被VAC被检测。

网络延迟和滞后补偿可能会引起真实的世界不可能的逻辑。例如,您可能被你看不到的目标所击中。服务器移到你的命中包围盒时光倒流,你仍然暴露给了攻击者。这种不一致问题不能通过一般化的防范解决,因为相对网络包传输的速度。在现实世界中,因为光传播如此之快,你,每个人都在你身边看到同一个世界,所以你才你没有注意到这个问题。

网络视图

Source引擎提供了一些工具来检查您的客户端连接速度和质量。使用net_graph 2可以启用相关的视图。下面的曲线图中,第一行显示每秒当前的渲染的帧,您的平均延迟时间,以及的cl_updaterate的当前值。第二行显示在最后进来的数据包(快照),平均传入带宽和每秒接收的数据包的字节大小。第三行显示刚刚传出的数据包(用户命令)相同的数据。

优化

默认的网络设置是专门为通过互联网连接的游戏服务器设计的。可以适用大多数客户机/服务器的硬件和网络配置工作。对于网络游戏,应该在客户端上进行调整,唯一的控制台变量是“rate”,它定义客户端可用的字节/网络连接带宽。

在一个良好的网络环境中,服务器和所有客户端都具有必要的硬件资源可用,可以调整带宽和更新频率设置,来获得更多的游戏精度。增加tickrate通常可以提高运动和射击精度,但会消耗更多的服务器CPU资源。tickrate 100运行的服务器的负载大概是tickrate 66运行时的约1.5倍, 因此如果CPU性能不足可能会导致严重的计算滞后,尤其是在玩家数量比较多的时候。建议对具有更高tickrate超的游戏服务器预留必要的CPU资源。

如果游戏服务器使用较高tickrate运行时,客户端可以在带宽可用的情况下增加他们的快照更新率(的cl_updaterate)和用户命令速率(的cl_cmdrate)。快照更新速率由服务器tickrate限制,一台服务器无法发送每个时钟周期的一个以上的更新。因此,对于一个tickrate66服务器,为的cl_updaterate最高的客户价值,将是66。如果你增加快照率遇到,你必须再次打开它。与增加的cl_updaterate你也可以降低画面插值延迟(cl_interp)。默认的插值延迟为0.1秒(默认的cl_updaterate为20) 视图内插延迟会导致移动的玩家会比静止不动的玩家更早发现对方。这种效果是不可避免的,但可以通过减小视图内插值延迟来减小。如果双方玩家正在移动,画面滞后会延迟影响双方玩家,双方玩家都不能获利。快照速率和视图延迟插值之间的关系如下:

插补周期= MAX(cl_interp,cl_interp_ratio /cl_updaterate)

可以设置cl_interp为0,仍然有插值的安全量。也可以把cl_updaterate增加,进一步降低你的插补周期,但不会超过更新tickrate(66)或客户端的网络处理能力。

小贴士

不要瞎改终端配置除非你完全确定你在干嘛

如果客户端和服务器没有足够CPU和网络资源,绝大多数所所谓高性能优化都是起负面作用

不要关闭画面插值和延迟补偿

这样并不能代理移动和设计精准度提升

优化设置可能不会对每个客户端都有效

如果是你是在游戏里或者SourceTv里第一视角观看你看到的画面和玩家可能不一样

观战者的画面没有延迟补偿

【版权声明】

原文作者未做权利声明,视为共享知识产权进入公共领域,自动获得授权;